Parsing And Serializing Large Datasets Using Newline-Delimited JSON In Node.js

Yesterday, I took a look at using JSONStream as a way to serialize and parse massive JavaScript objects in Node.js without running into the memory constraints of the V8 runtime. After posting that experiment, fellow InVision App engineer - Adam DiCarlo - suggested that I look at a storage format known as "Newline-Delimited JSON" or "ndjson". In the "ndjson" specification, rather than storing one massive JSON value per document, you store multiple smaller JSON values, one per line, delimited by the newline character. To get my feet wet with this storage approach, I wanted to revisit yesterday's experiment, this time using the ndjson npm module by Finn Pauls.

Newline-delimited JSON (ndjson) is actually an approach that we (at InVision App) have been using for a long time, but I didn't realize it was a specification that had a name. ndjson is how we handle our log files - serializing and appending one log item per line to a file or streaming it to the standard output associated with the running process. Even though this format makes perfect sense for logging, it had never occurred to me to use this kind of format for storage. But, in reality, the ndjson format has several advantages over plain JSON:

- You can simply append new data to a file without first parsing the file and manipulating objects in memory.

- Parsing becomes incredibly simple - just buffering data until you find a newline character - no fussing with fancy SAX-style parsers.

- The storage format works very well for piping into other processes from the command-line.

- The simplified process should, in theory, be more performant (though I have not tested this).
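To make the first two points concrete, here is a minimal sketch that uses only core Node.js modules. The helper names (`appendRecord` and `createLineParser`) are hypothetical and are not part of the ndjson module. The sketch assumes that chunks arrive as strings and that every line holds one complete JSON value. Splitting on the newline character is safe because `JSON.stringify()` escapes any newline inside a string value, so a serialized record never contains a raw line break.

```javascript
// Core Node.js modules only; no third-party dependencies.
var fs = require( "fs" );
var os = require( "os" );
var path = require( "path" );

// Hypothetical helper: append ONE record to an ndjson file. Nothing that is already
// in the file has to be read or parsed first.
function appendRecord( filePath, record ) {

	fs.appendFileSync( filePath, ( JSON.stringify( record ) + "\n" ) );

}

// Hypothetical helper: accept string chunks of any size, buffer them until a newline
// shows up, and hand each complete line to the callback as a parsed value.
function createLineParser( onRecord ) {

	var buffer = "";

	return {
		write: function( chunk ) {

			buffer += chunk;

			var newlineIndex;

			while ( ( newlineIndex = buffer.indexOf( "\n" ) ) !== -1 ) {

				var line = buffer.slice( 0, newlineIndex );
				buffer = buffer.slice( newlineIndex + 1 );

				// Skip blank lines rather than treating them as parse errors.
				if ( line.trim() ) {

					onRecord( JSON.parse( line ) );

				}

			}

		},
		end: function() {

			// Handle a final line that has no trailing newline.
			if ( buffer.trim() ) {

				onRecord( JSON.parse( buffer ) );

			}

			buffer = "";

		}
	};

}

// Usage: append two records to a temp file, then parse the file back in two
// arbitrary chunks to mimic the chunk boundaries of a read stream.
var demoPath = path.join( os.tmpdir(), ( "ndjson-append-demo-" + process.pid + ".ndjson" ) );

fs.writeFileSync( demoPath, "" );
appendRecord( demoPath, { id: 1, name: "The Firm" } );
appendRecord( demoPath, { id: 2, name: "Broadcast\nNews" } );

var parsedRecords = [];
var parser = createLineParser(
	function handleRecord( record ) {

		parsedRecords.push( record );

	}
);

var content = fs.readFileSync( demoPath, "utf8" );

parser.write( content.slice( 0, 10 ) );
parser.write( content.slice( 10 ) );
parser.end();

fs.unlinkSync( demoPath );
console.log( parsedRecords );
```

The second record deliberately contains a newline inside its name to show that the escaped value survives the round trip. A real module will likely do more than this (error handling for malformed lines, for example), so treat this as an illustration of the idea rather than a replacement.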

That said, here's yesterday's experiment, refactored to use ndjson instead of JSONStream. Since both modules work using Transform Streams, the code is, more or less, the same: we start off with an in-memory collection which we stream to a .ndjson file. Then, we stream that file back into memory, logging items to the terminal as they become available (as "data" events):

    // Require the core node modules and the third-party npm modules.
    var chalk = require( "chalk" );
    var fileSystem = require( "fs" );
    var ndjson = require( "ndjson" );

    // ----------------------------------------------------------------------------------- //
    // ----------------------------------------------------------------------------------- //

    // Imagine that we are performing some sort of data migration and we have to move data
    // from one database to flat files; then transport those flat files elsewhere; then,
    // import those flat files into a different database.
    var records = [
        { id: 1, name: "O Brother, Where Art Thou?" },
        { id: 2, name: "Home for the Holidays" },
        { id: 3, name: "The Firm" },
        { id: 4, name: "Broadcast News" },
        { id: 5, name: "Raising Arizona" }
        // .... hundreds of thousands of records ....
    ];

    // Traditionally, we might store ONE JSON document PER FILE. However, this has some
    // serious implications once we move out of the local development environment and
    // into production. As the JSON documents grow in size, we run the risk of running
    // out of memory (during the serialization and parsing process). To get around this,
    // we can use a slightly different storage format in which our data file is not ONE
    // JSON document PER FILE, but rather ONE JSON document PER LINE. This is known as
    // "ndjson" or "Newline-Delimited JSON". To use this format, we're going to create an
    // ndjson Transform stream (aka "through" stream) that takes each JavaScript object
    // and writes it as a newline-delimited String to the output stream (which will be a
    // file-output stream in our case).
    // --
    // NOTE: We're using .ndjson - NOT .json - for this storage format.
    var transformStream = ndjson.stringify();

    // Pipe the ndjson serialized output to the file-system.
    var outputStream = transformStream.pipe( fileSystem.createWriteStream( __dirname + "/data.ndjson" ) );

    // Iterate over the records and write EACH ONE to the TRANSFORM stream individually.
    // Each one of these records will become a line in the output file.
    records.forEach(
        function iterator( record ) {

            transformStream.write( record );

        }
    );

    // Once we've written each record in the record-set, we have to end the stream so that
    // the TRANSFORM stream knows to flush and close the file output stream.
    transformStream.end();

    // Once ndjson has flushed all data to the output stream, let's indicate done.
    outputStream.on(
        "finish",
        function handleFinish() {

            console.log( chalk.green( "ndjson serialization complete!" ) );
            console.log( "- - - - - - - - - - - - - - - - - - - - - - -" );

        }
    );

    // ----------------------------------------------------------------------------------- //
    // ----------------------------------------------------------------------------------- //

    // Since the stream actions are event-driven (and asynchronous), we have to wait until
    // our output stream has been closed before we can try reading it back in.
    outputStream.on(
        "finish",
        function handleFinish() {

            // When we read the file back into memory, ndjson will stream, buffer, and split
            // the content based on the newline character. It will then parse each newline-
            // delimited value as a JSON object and emit it from the TRANSFORM stream.
            var inputStream = fileSystem.createReadStream( __dirname + "/data.ndjson" );
            var transformStream = inputStream.pipe( ndjson.parse() );

            transformStream
                // Each "data" event will emit one item from our original record-set.
                .on(
                    "data",
                    function handleRecord( data ) {

                        console.log( chalk.red( "Record (event):" ), data );

                    }
                )

                // Once ndjson has parsed all the input, let's indicate done.
                .on(
                    "end",
                    function handleEnd() {

                        console.log( "- - - - - - - - - - - - - - - - - - - - - - -" );
                        console.log( chalk.green( "ndjson parsing complete!" ) );

                    }
                )
            ;

        }
    );

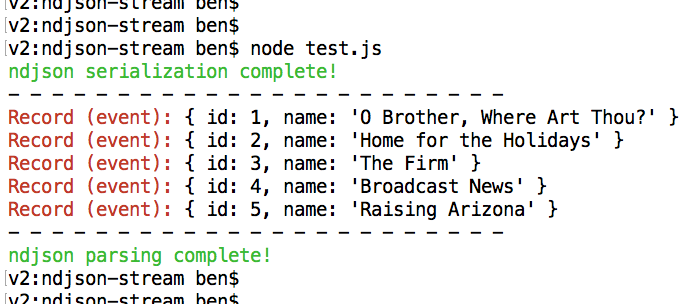

As you can see, the ndjson module is not quite a drop-in replacement for JSONStream; but, retrofitting the code to use ndjson was easy. And, when we run the above Node.js code, we get the following terminal output:

And, if we look at the actual data.ndjson file, we can see that each record in the original collection was written to its own line of the output file:

    {"id":1,"name":"O Brother, Where Art Thou?"}
    {"id":2,"name":"Home for the Holidays"}
    {"id":3,"name":"The Firm"}
    {"id":4,"name":"Broadcast News"}
    {"id":5,"name":"Raising Arizona"}

I have to say, I really like the Newline-delimited JSON (ndjson) storage format. The API surface is basically the same as JSONStream; but, I think ndjson has a number of worthwhile advantages. Of course, both of these approaches use Node.js streams; so, neither approach is quite as easy as reading and writing a single JSON object to a file. But, if you need to serialize data at scale, this seems like a performant approach.
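One caveat when serializing at scale: calling `.write()` in a tight loop ignores the stream's backpressure signal, so records can pile up in the stream's internal buffer if the file system is slower than the loop. That is harmless for five records, but it may matter when the records come from something like a database cursor. The sketch below is one possible way to handle it using only core Node.js modules. `createStringifyStream` and `writeRecords` are hypothetical names, and the small Transform is a stand-in that I am assuming behaves roughly like `ndjson.stringify()` (objects in, one JSON line out).

```javascript
// Core Node.js modules only; no third-party dependencies.
var fs = require( "fs" );
var os = require( "os" );
var path = require( "path" );
var stream = require( "stream" );

// Hypothetical stand-in for ndjson.stringify(): a Transform stream that accepts
// JavaScript objects and emits one JSON-encoded line per object.
function createStringifyStream() {

	return new stream.Transform({
		writableObjectMode: true,
		transform: function( record, encoding, callback ) {

			callback( null, ( JSON.stringify( record ) + "\n" ) );

		}
	});

}

// Hypothetical helper: stream any iterable of records to an ndjson file. The
// pipeline() function pauses the source whenever a downstream stage falls behind,
// and it passes the first error from any stage to the callback.
function writeRecords( records, filePath, callback ) {

	stream.pipeline(
		stream.Readable.from( records ),
		createStringifyStream(),
		fs.createWriteStream( filePath ),
		callback
	);

}

// Usage: write two records to a temp file, print the file, then clean up.
var demoPath = path.join( os.tmpdir(), ( "ndjson-pipeline-demo-" + process.pid + ".ndjson" ) );

writeRecords(
	[
		{ id: 1, name: "Raising Arizona" },
		{ id: 2, name: "The Firm" }
	],
	demoPath,
	function handleDone( error ) {

		if ( error ) {

			throw error;

		}

		console.log( fs.readFileSync( demoPath, "utf8" ) );
		fs.unlinkSync( demoPath );

	}
);
```

Since `Readable.from()` accepts any iterable or async iterable, the same helper should work for a generator that yields records lazily, which keeps the full record-set from ever having to live in memory at once.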
