The Anatomy Of An INNER JOIN Query In SQL

Seven years ago, I talked about how I much prefer INNER JOIN clauses over WHERE clauses whenever possible within a SQL query. But, I've never exactly codified how I think about the anatomy and the structure of an INNER JOIN. Now, that's not for lack of time - that's for lack of consciousness. Until recently, I don't think I had a true strategy for INNER JOINs; my approach worked, but it lacked discipline and understanding. In the last few weeks, however, I've started to really develop a solid plan for INNER JOIN construction.

After reading that introductory paragraph, your gut reaction may very well be something like, "Who cares - my RDBMS (Relational Database Management System) performs that kind of optimization for me when it compiles the query." This may be true; it may not be. But, ultimately it's irrelevant because it misses the point - when you don't think about how your data is being used, you'll become complacent in how your data is being structured.

And, when that happens, you build slow queries that don't have to be slow.

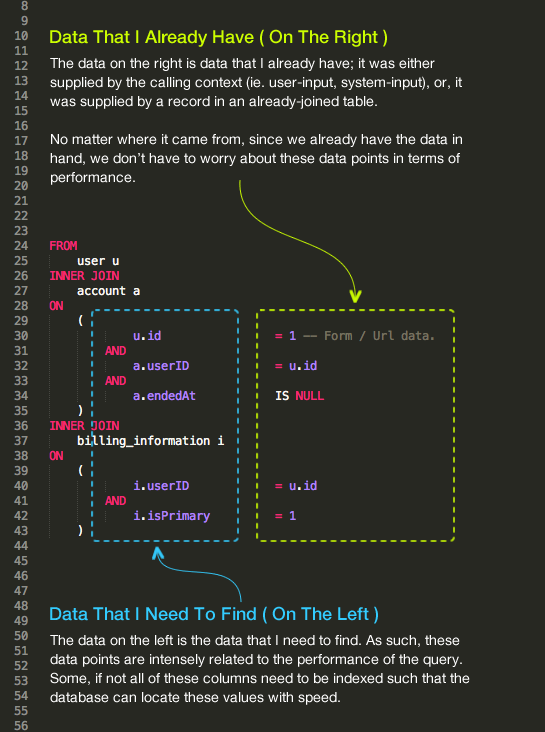

Now, when I think about INNER JOINs, I think in terms of data that I have, and data that I need. The data that I have goes on the right; the data that I need goes on the left. When I do this, I can clearly see the flow of information. And, I can more easily see where I need to apply my table indices: on the data that I need so that the database can locate it quickly.
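To make the have/need split concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The table and column names (`user`, `account`, `billing_information`, `endedAt`, `isPrimary`) are borrowed from the reader comments further down; the sample rows and index names are assumptions invented for illustration, and SQLite is only a stand-in for whatever database you actually run.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE user (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE account (id INTEGER PRIMARY KEY, userID INTEGER, endedAt TEXT);
    CREATE TABLE billing_information (id INTEGER PRIMARY KEY, userID INTEGER, isPrimary INTEGER);

    -- Hypothetical indexes: they cover the columns on the LEFT of each
    -- condition below, i.e. the data that is "needed".
    CREATE INDEX ix_account_user ON account (userID, endedAt);
    CREATE INDEX ix_billing_user ON billing_information (userID, isPrimary);

    INSERT INTO user VALUES (1, 'Ben'), (2, 'Kate');
    INSERT INTO account VALUES (10, 1, NULL), (11, 1, '2012-01-01'), (12, 2, NULL);
    INSERT INTO billing_information VALUES (100, 1, 1), (101, 1, 0), (102, 2, 1);
""")

# In every condition, the value being looked up ("need") is on the left and
# the value already in hand ("have") is on the right.
rows = conn.execute("""
    SELECT u.id, a.id, i.id
    FROM user u
    INNER JOIN account a
    ON ( u.id = 1 AND a.userID = u.id AND a.endedAt IS NULL )
    INNER JOIN billing_information i
    ON ( i.userID = u.id AND i.isPrimary = 1 )
""").fetchall()

print(rows)
```

With this sample data, only user 1's open account and primary billing record should survive the joins.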

With this structure, I find it very easy to see the flow of information. But, you still need to understand the volume of information that is being consumed. When you JOIN tables together, you want to put the most limiting tables higher up in the JOIN structure. This way, you minimize the data that you "have" (see above), which in turn, minimizes the data that you "need" (see above).

That's my current understanding. It's taken me a while to get here; but, I am constantly trying to better my understanding of SQL. So, if you see something here that doesn't make sense, or you have a suggestion, I am always excited to improve!

Reader Comments

Ben, while I appreciate inner joins, and admire your structure, I don't really see why it's better (or that different) than using a WHERE clause.

Values on the right seems like good style.

Indexing commonly referenced tables -> definitely good.

Moving the most limiting tables higher up, also good!

Not sure why any of these aren't true of WHERE.

INNER JOIN is nice because (last time I checked) it was harder to get Cartesian product results, but I haven't looked into that syntax in a while.

Thoughts?

Are you thinking about the RDBMS execution plan or just in terms of human being understanding?

Nice job explaining Ben. Would be interesting to get Brent Ozar or Rudy Limeback's opinion on these performance gains.

Kate, I don't have a SQL Server install handy to check the execution, but I'm 99% certain that if you're joining 2 tables through a third, say A to B to C, and you know that you only need results where C.Date IS NULL then you want to filter that out in the nested JOIN rather than in the WHERE clause for performance reasons.

WHERE filters results returned by the FROM; reducing the number of records matched in the FROM statement is better than removing them later with the WHERE statement.

A better example is that if you wanted to run a query that involved the intersection of two sets of data that were somewhat separate sections of data... like for example if you have a table of Events, Events have EventTypes and EventTypes have an attribute "Singles Only". You then have a Users table, Users have MaritalStatus, and MaritalStatus has a flag "Single". You of course also have an Attending table mapping Events to Users.

If you wanted to know if any Non-Singles were signed up to go to your Singles Only events, the right answer is not to write a single query joining these tables together by ID and then just sort it out in a WHERE clause. It's better to get one result that is Users whose MaritalStatus isn't Single, JOIN that result to Attending, and JOIN that to a SELECT that only returns Events with EventTypes that are Singles Only. Formatting it that way means that the JOINs around the Attending table aren't joining and returning users that ARE single, and aren't matching Events that AREN'T Singles Only.

That all depends on the strength of the query optimizers too... usage may vary.
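As a rough sketch of the two shapes described in this comment, the Python snippet below builds the five tables in an in-memory SQLite database and runs both a flat join with a WHERE clause and a version that joins pre-filtered derived tables. The column names (`eventTypeID`, `singlesOnly`, `maritalStatusID`, `isSingle`) and the sample rows are assumptions; the comment only names the tables and flags.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE EventTypes (id INTEGER PRIMARY KEY, singlesOnly INTEGER);
    CREATE TABLE Events (id INTEGER PRIMARY KEY, eventTypeID INTEGER);
    CREATE TABLE MaritalStatus (id INTEGER PRIMARY KEY, isSingle INTEGER);
    CREATE TABLE Users (id INTEGER PRIMARY KEY, maritalStatusID INTEGER);
    CREATE TABLE Attending (eventID INTEGER, userID INTEGER);

    INSERT INTO EventTypes VALUES (1, 1), (2, 0);
    INSERT INTO Events VALUES (1, 1), (2, 2);
    INSERT INTO MaritalStatus VALUES (1, 1), (2, 0);
    INSERT INTO Users VALUES (1, 1), (2, 2), (3, 2);
    INSERT INTO Attending VALUES (1, 1), (1, 2), (2, 2), (2, 3);
""")

# Shape 1: join everything by ID, then sort it out in the WHERE clause.
flat = conn.execute("""
    SELECT att.eventID, att.userID
    FROM Attending att
    INNER JOIN Events e ON e.id = att.eventID
    INNER JOIN EventTypes et ON et.id = e.eventTypeID
    INNER JOIN Users u ON u.id = att.userID
    INNER JOIN MaritalStatus ms ON ms.id = u.maritalStatusID
    WHERE et.singlesOnly = 1 AND ms.isSingle = 0
    ORDER BY att.eventID, att.userID
""").fetchall()

# Shape 2: narrow each side first, then join the two small sets via Attending.
nested = conn.execute("""
    SELECT att.eventID, att.userID
    FROM (
        SELECT u.id FROM Users u
        INNER JOIN MaritalStatus ms
        ON ( ms.id = u.maritalStatusID AND ms.isSingle = 0 )
    ) non_singles
    INNER JOIN Attending att ON att.userID = non_singles.id
    INNER JOIN (
        SELECT e.id FROM Events e
        INNER JOIN EventTypes et
        ON ( et.id = e.eventTypeID AND et.singlesOnly = 1 )
    ) singles_events ON singles_events.id = att.eventID
    ORDER BY att.eventID, att.userID
""").fetchall()

print(flat)
print(nested)
```

Both shapes return the same rows here; whether the second one is actually faster depends on the optimizer, as the comment itself notes.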

@Kate,

When using "EXPLAIN" to see how the database is going to execute, I do not always see any difference between an INNER JOIN and a WHERE clause. In fact, most of the time, the execution is exactly the same.
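One way to check this for yourself is to ask the database for the plan of both forms and compare them. The sketch below does that with SQLite's `EXPLAIN QUERY PLAN` from Python; MySQL's `EXPLAIN` output looks different, and the tables, index, and rows here are assumptions made up for the demonstration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE user (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE account (id INTEGER PRIMARY KEY, userID INTEGER, endedAt TEXT);
    CREATE INDEX ix_account_user ON account (userID);
    INSERT INTO user VALUES (1, 'Ben'), (2, 'Kate');
    INSERT INTO account VALUES (10, 1, NULL), (11, 1, '2012-01-01'), (12, 2, NULL);
""")

filter_in_on = """
    SELECT u.id, a.id FROM user u
    INNER JOIN account a
    ON ( a.userID = u.id AND a.endedAt IS NULL )
    ORDER BY u.id, a.id
"""
filter_in_where = """
    SELECT u.id, a.id FROM user u
    INNER JOIN account a
    ON ( a.userID = u.id )
    WHERE a.endedAt IS NULL
    ORDER BY u.id, a.id
"""

def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is the readable step text.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

rows_on = conn.execute(filter_in_on).fetchall()
rows_where = conn.execute(filter_in_where).fetchall()
plan_on = plan(filter_in_on)
plan_where = plan(filter_in_where)

print(rows_on == rows_where)  # same rows for an INNER JOIN
print(plan_on)
print(plan_where)
```

The exact plan text varies by engine and version, so treat the printed steps as something to read rather than something to rely on.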

For me, the real payoff is not in the change of plan, but rather in the change of mindset. When I use a WHERE clause, my thinking is,

"Let me join a bunch of tables together and then limit the results."

If I really work the INNER JOINs, on the other hand, my thinking becomes more focused,

"Let me join a bunch of tables and try to limit the cross-products as much as possible at every step of the query."

Now, again, there may not actually be any change in SQL execution; but, I find that it enhances my overall understanding of the data and about how it's used. Basically, at the very least, I find it increases my consciousness about my own code.

@Roger,

Both. Primarily, I'm talking about consciousness that can lead to a better understanding of how the data is being used and about how you may structure indices to provide optimal performance.

But, I have definitely found that when converting from WHERE clauses to INNER JOINs, you may change the way the query is running -- not from an optimization standpoint, but rather from a usage standpoint. Meaning, when you change the structure of the query, you can sometimes remove things you didn't need at all.

@Andy,

That would be cool. It's an interesting topic, though, because the performance gains can be from different sources (one from the database execution plan, but another from a change in understanding about the data... and all the goodness that can come from that).

From your other article: "Personally, I just like to put in the ON clause because that's the point where I think about the relationship between tables" -- Ben

From that, I think you should NOT put the "u.id = 1" part in the ON clause. It has *nothing* to do with the joining of tables, and everything to do with filtering of data.

My rule of thumb: If it filters data, then put it in the WHERE clause. If it joins tables together, then put it in the ON clause. Someone looking at your queries later will understand immediately what each clause is doing.

This does *not* mean you should put:

This is very bad for performance, because the database has to do all of the JOIN'ing, and then throw away all those rows (in effect). I just optimized one of these, using a NOT EXISTS clause, and had far fewer I/O's.

I've been doing a fair bit of SQL optimisations recently, and the first thing I'll always do is move the where clauses into the join clauses. It just makes sense - why grab everything and then filter it with a where clause, when you can just filter it within the join and not deal with data you don't need? A query I helped optimise that ran on a few million records using this strategy went from a 19 second execution to just under 12.

I think most people don't think about it simply cause Joins are complicated enough to the inexperienced without multiple conditional clauses.

@Ben,

"Now, again, there may not actually be any change in SQL execution; but, I find that it enhances my overall understanding of the data and about how it's used. Basically, at the very least, I find it increases my consciousness about my own code."

This is one of the best reasons to use JOINs over WHERE. The optimizer is usually very good at its job, which is why you don't see much difference in the ultimate execution plans of INNER JOINs vs WHEREs. But JOINs make it much clearer what data you're ultimately after. Especially in a long, multi-table query.

Plus it helps eliminate any ambiguity within your data. The worst thing in the world is to return/exclude rows that you didn't mean to and not know it. As I've commented before, getting into the habit of keeping your limiters in the INNER JOIN helps when you write OUTER JOINs. It doesn't really make much difference in INNER JOINs, but you can get VERY different behavior in an OUTER JOIN when you keep the criteria with the JOIN vs down in the WHERE clause.
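The OUTER JOIN difference mentioned here is easy to reproduce. The following is a small sketch with Python's `sqlite3` module; the `user` and `account` tables mirror the names used elsewhere in this thread, and the two sample users are assumptions chosen so the results diverge.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE user (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE account (id INTEGER PRIMARY KEY, userID INTEGER, endedAt TEXT);
    INSERT INTO user VALUES (1, 'Ben'), (2, 'Kate');
    -- Ben has an active account; Kate only has one that has ended.
    INSERT INTO account VALUES (10, 1, NULL), (11, 2, '2012-01-01');
""")

# Criteria kept with the JOIN: every user is returned, and the account
# columns are NULL when no active account matches.
in_on = conn.execute("""
    SELECT u.name, a.id
    FROM user u
    LEFT OUTER JOIN account a
    ON ( a.userID = u.id AND a.endedAt IS NULL )
    ORDER BY u.id
""").fetchall()

# Criteria moved to the WHERE clause: Kate is first matched to her ended
# account, and then that joined row is filtered out entirely.
in_where = conn.execute("""
    SELECT u.name, a.id
    FROM user u
    LEFT OUTER JOIN account a
    ON ( a.userID = u.id )
    WHERE a.endedAt IS NULL
    ORDER BY u.id
""").fetchall()

print(in_on)     # [('Ben', 10), ('Kate', None)]
print(in_where)  # [('Ben', 10)]
```

With an INNER JOIN both placements would return the same single row, which is why the habit only starts to matter once the join becomes an OUTER one.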

I'm not a SQL guru by any stretch of the imagination. But the more I use it, the more I realize what it's capable of. And the more I see where I've done things in the past that were less than ideal. I've seen too many coders writing sub-optimal queries and not really understanding the implications of what they're writing. Every developer who interacts with data, regardless of primary language, should learn as much as they can about the flavor of SQL that they're interacting with. It's easy to get data out of a db. It's a bit more difficult to get the correct data and get it efficiently.

"But, ultimately it's irrelevant because it misses the point - when you don't think about how your data is being used, you'll become complacent in how your data is being structured."

Brilliant!

Even if you skip the rest of the post, read this line several times.

@Tom: "From that, I think you should NOT put the "u.id = 1" part in the ON clause. It has *nothing* to do with the joining of tables, and everything to do with filtering of data."

I missed that. I would agree, normally I'd move that u.ID to the WHERE clause. But I see what Ben is trying to do. It should probably be changed to a.UserID = 1. That would effectively filter the Account table for only UserID 1 based off of Account.UserID and not User.ID. Subtle difference, but still a difference. You could also technically throw an AND i.UserID = 1 in the billing_information JOIN, since that query is essentially only looking for u.ID = 1 also. But this starts getting into the realm of readability vs allowing the optimizer to do its job. Since it's an INNER JOIN, I believe the optimizer would only join on UserID 1 if that was left out of the INNER JOINs and put in the WHERE clause. I could be wrong on that though.

I'm glad you guys have a handle on how the query optimizer works, because it's a complete and utter mystery to me. I have consistently found that every time I have a notion as to how the SQL expression influences the ultimate execution plan, I've turned out to be dead wrong. In fact if you ask the optimizer guys how to lay out your query for best optimization, their advice tends to boil down to "don't try - the optimizer is cleverer than you are" (or, if you convince them you really need to understand this, the advice is more like "1. Ask for a raise 2. Pour yourself a stiff drink 3. Block out the next eight months 4. etc.").

So I say lay out your queries for readability, encapsulate often-used join combinations into views (I blogged about this here http://lagod.id.au/blog/?p=163) and let the optimizer do its thing.

@Tom,

When you talk about filtering vs. non-filtering vs. joining properties, I think we get a little lost in the semantics of it all. If I recall correctly, you can even join tables using the old-school syntax like:

SELECT * from tableA, tableB ....

Maybe that was just MS-Access - can't remember. Maybe that still works in modern databases. But, my point is, if you can join that way (without any column definitions), then ALL properties could be considered nothing more than "filter" conditions, and NONE of them *need* to be considered "joining" conditions.
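For what it's worth, the comma syntax is still accepted by SQLite (and, as far as I know, by the other mainstream engines). The sketch below uses Python's `sqlite3` module with two made-up tables; the `aID` column and the rows are assumptions. It shows that the bare comma join is a cross product, and that adding the condition in the WHERE clause returns the same rows as an explicit INNER JOIN.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE tableA (id INTEGER PRIMARY KEY, label TEXT);
    CREATE TABLE tableB (id INTEGER PRIMARY KEY, aID INTEGER);
    INSERT INTO tableA VALUES (1, 'x'), (2, 'y');
    INSERT INTO tableB VALUES (10, 1), (11, 1), (12, 2);
""")

# No condition at all: every row of tableA paired with every row of tableB.
cross_count = conn.execute("SELECT COUNT(*) FROM tableA, tableB").fetchone()[0]

# Old-school join: the relationship is expressed as a WHERE "filter".
comma = conn.execute("""
    SELECT a.id, b.id
    FROM tableA a, tableB b
    WHERE b.aID = a.id
    ORDER BY a.id, b.id
""").fetchall()

# The same relationship expressed in an ON clause.
explicit = conn.execute("""
    SELECT a.id, b.id
    FROM tableA a
    INNER JOIN tableB b
    ON ( b.aID = a.id )
    ORDER BY a.id, b.id
""").fetchall()

print(cross_count)        # 6
print(comma == explicit)  # True
```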

@Matt,

Thanks :D

@Shawn, @Tom,

I look at the JOIN statements as telling a story. And, I like to read that story top-down. This makes a difference when you think about where to filter on a known id/pkey value. When I look at my current JOIN construction, I see this story:

* Get the record for user ID 1.

* Then, get the account for that user record.

* Then, get the primary billing information for that user record.

If I were to move the ID=1 filter down to the WHERE clause, the story would read very differently:

* Get ALL user records.

* Then, get the account for ALL user records.

* Then, get the primary billing information for ALL user records.

* Now, filter that list down to the user with ID=1.

To me, the first story expresses a more accurate intent.

@Jaime,

Views are something that intrigue me. Way back in the day, when I was on MS SQL Server, I used to use Views for tons of stuff - like you said, tables that were almost always being joined, or filtered in a specific way.

Every now and then, I go to read about them again; but, now that I am on MySQL, I keep reading that Views can have performance problems. But, I have not done any testing on my own. Do you have any insight into this?

I would have written the query the following way:

    FROM
        ( SELECT * FROM user WHERE id = 1 ) u
    INNER JOIN
        ( SELECT * FROM account WHERE endedAt IS NULL ) a
    ON
        a.userID = u.id
    INNER JOIN
        ( SELECT * FROM billing_information WHERE isPrimary = 1 ) i
    ON
        i.userID = u.id

Instead of the * I usually specifically list only the columns I want to see or use.

For me this allows me to quickly look at the query and see exactly what data I am pulling from each table. The ON clause tells me how the tables are being combined. I find I can find errors quicker when structured this way.

Ok, *technically* SQL is based on set theory. If you have never studied SQL on a formal basis, and are interested, the C.J. Date book is *the* book on SQL.

Quick overview: in SQL, a table is really just a set of records. The SQL language is a way of specifying subsets, intersections, and unions (mathematical operations) on those sets. So, the INNER JOIN versus FROM table,table,... syntax is just a way of attaching semantics to these mathematical operations.

For me, the JOIN syntax was a way of separating the *why* of the WHERE clause; the JOIN was to "join" tables, and the WHERE was to "filter" the data. The optimizer has the unenviable job of looking at all of these and deciding which is more efficient, but the end product should be the same no matter which way it chooses to run the individual clauses.

I think this is just one of those cases where different programmers are going to do things differently. I wouldn't tell you that your way is wrong or my way is right, but I definitely like my way better -- or else I would switch to yours! But, if I found code with "JOIN ... ON u.userid = 1 AND ...", I wouldn't bother to switch it around unless my changes changed the needs of the query. I understand why you are doing it, but disagree that it "makes more sense." As with most programming, there are trade-offs ... there is no 100% perfect solution.

For example, if you wanted to change from an INNER to LEFT OUTER join on table "a", how would you have to change your clauses? The "u.userid" would have to be moved outside, since it doesn't apply to the JOIN now.

Just my $0.02!

@Mark:

Have you checked those kinds of queries in an optimizer? It seems like, because of the parentheses, the optimizer would *have* to run the queries inside of the parens first, so the first one would get all user records with id=1 (fine), but the second would get all account records where endedat would be NULL ... ignoring those that belonged to user.id=1. It seems like your record sets would be large, then filtered down by the optimizer later, costing you I/O to grab records (or indexes).

Just something to look into. If the optimizer works fine, then that just seems like another way to write the same thing -- and I'm sure there are other equally good variations!

PS: Please excuse the double post, but it was replying to different people. Don't hate me! ;-)

@Tom

At least in MS SQL Server 2005 (this is what I have to use at work) it gives the exact same execution plan for all 3 methods

Yeah... After checking in SSMS, it looks like my suggestion of Nested joins is going to execute the same as joining them all and sorting it out in the WHERE clause.

That optimizer, man... it's pretty slick.

@Ben,

I've never used MySQL in anger - or, at least, only for Drupal and Wordpress, which is hardly relational data at all. However, I'm about to port a large system from MSSQL to MySQL, so if what you say is true I'm going to be not too happy. I do like my views. Anyway, I'll report back in a couple of months.

I have to chime in here and be a dissenter. Yes, it is true, that you CAN put filtering criteria in a JOIN clause or a WHERE clause. It's also true that you can define table relationships in the WHERE clause instead of the JOIN clause, but I think just about everyone would agree that that's a bad thing at this point (although it was the method people seemed to prefer prior to SQL Server 2000).

The problem here is that you are violating the semantics of the JOIN clause by including filtering criteria in the JOIN itself. The JOIN clause has a specific purpose, and a single, clearly-defined purpose according to the SQL standard. Its purpose is to define relationships between tables and keys. And that's it. It is not intended as a mechanism for filtering data. The WHERE clause is explicitly designed for filtering data.

Think of it this way. The JOIN clause is intended to describe table relationships and nothing else. The WHERE clause is intended to define filtering criteria and nothing else. They are not semantically equivalent, even though you can abuse them both and swap parts of them interchangeably with no side effects (but only sometimes).

The reason that the query optimizer usually generates the same query plan regardless of where you put those criteria is because people are making incorrect assumptions about how queries are executed. SQL Server does not have a "2 pass" execution engine, where it first gathers up all of the data into one huge result set in one pass and then filters it in another. This is an easy to comprehend mental model for how it works, but it is not even close to reality. In reality, the first thing the server does is look at the database's runtime statistics to determine a query plan. If you look at a query plan, there is no "join all these tables together into one big result set" phase. In fact, there can be many, many different steps taken for each join or condition, and their order of execution is neither guaranteed, nor consistent. Sometimes the filter gets applied during the table read. Sometimes it is applied after. Sometimes it is never applied at all if the optimizer finds a better way of limiting the results. Sometimes it takes multiple operations to perform a single join, sometimes it happens in one operation. The only way to know is to look at the query plan. You will never be able to trick the query optimizer into working the way the "2 pass" mental model works, because that's just not how SQL Server works, so using that mental model is incorrect.

Also, query plans can change over time, and especially due to the volume of data and indexing on a given database. If you've got an active database, query plans can change extremely frequently. The only way to truly optimize a query is to monitor how it performs in use, and to monitor the query plans as they change. Just looking at a query plan at development time is not enough - it's a starting point, but it's not enough. This becomes even more apparent if you have a development environment that has significantly less data than your production environment. The query plans often wind up looking nothing alike.

So given that query plans are so variable, and change over time, it's not worth trying to outsmart the optimizer 99% of the time. It is going to tune and adjust plans over time, as the server gathers more statistics about how and when your data is used. Instead, you should focus on writing SQL queries that are SEMANTICALLY correct first, and then only tweaking things and violating semantics if there is a really good reason to (e.g., you know that a query is bad because you tried several different versions and looked at their query plans). But you should always start with a semantically correct query before breaking the rules.

And being semantically correct means no criteria in your JOIN clauses, and no relationships in your WHERE clauses. The fact that the criteria in the JOIN condition works does not make it the correct approach to take.

This is not to say that you should never put any criteria in the JOIN clause ever (there are very valid use cases for NULL handling, for example), but it should be a rarity, not a modus operandi. It also violates best practices.

BOL: http://technet.microsoft.com/en-us/library/ms191517(v=SQL.105).aspx

"Specifying the join conditions in the FROM clause helps separate them from any other search conditions that may be specified in a WHERE clause, and is the recommended method for specifying joins"

BOL: http://technet.microsoft.com/en-us/library/ms188047(v=sql.105).aspx

"<WHERE> Specifies the search condition for the rows returned by a DELETE, MERGE, SELECT, or UPDATE statement in SQL Server 2008 R2. Use this clause to limit the number of rows returned by or affected by the statement."

So here we see 2 things: 1) JOINs are for describing relationships and that's it. 2) WHEREs are for filtering, so don't worry about trying to filter rows in your join.

I think with SQL Server, most of what makes something work in this or that execution plan depends on the kind of join hints you aim for. I don't try and make a career out of what DBAs are for with query optimization, but every now and again I run into large volumes, especially with ERP work, that call for a very calculated approach. Usually I rely on the query optimizer to select the best execution plan (which is why the results can look the same for these kinds of queries), but there are other times when you need to enforce a "more better" join type using the OPTION clause.

I ran into one where I needed to present sales orders for specific customers attached to a salesperson client in a web ERP system.

At first blush, these two seemed the same in the execution plan (more simplified versions of what we actually needed):

    SELECT IDColumn
    FROM SALESORDERTABLE
    WHERE CustomerID IN (SELECT ID FROM CUSTOMERTABLE WHERE <selection criteria>)

vs.

    SELECT IDColumn
    FROM SALESORDERTABLE
    INNER JOIN CUSTOMERTABLE ON SALESORDERTABLE.CustomerID = CUSTOMERTABLE.ID

What I was seeing in my execution plan was a hash join. After creating indexes on the ID column on my Customer table and an index on the lookup column on my sales order table, I would see both queries doing a single index scan on either table, but the distinction was one used a soft merge and the other a hash match.

At first blush, in my execution, the WHERE IN stacked faster with the merge join, as I expected. Still went with the INNER JOIN variant, however.

What I found, though, on the deployed SQL Server, was that the volume of data meant that the INNER JOIN cum hash matching was forcing a whole lot of disk I/O. The client didn't have SSD, so the performance was significantly diminished compared to what I was modeling in testing. To make matters worse, we advised, before redeveloping it, for the client to bump up their memory in the server, and never bumped the max memory setting in SQL Server proper, and it was several months before we moved them to change it.

The WHERE IN ended up being largely more performant because the join type selected by the optimizer availed itself of the natural physical sorting of our row keys.

Later, though, they wanted to add some kind of sorting, and that messed up the performance of the merge join, and the hash match once again became desirable.

Of course, all of this presupposes Microsoft's query optimizer. I'm not sure if MySQL supports some of those operations, particularly the merge join you'd find in Oracle or SQL Server. What I did find was that as the needs of this particular query scaled, what kind of joining or clause held the most efficacy, when depending entirely on the query optimizer, varied.
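To see the two query shapes from this comment side by side, here is a small runnable sketch using Python's `sqlite3` module. The `Region` column stands in for the unspecified `<selection criteria>`, and the index name and rows are assumptions as well. It only demonstrates that the two forms return the same orders when `CUSTOMERTABLE.ID` is unique; it says nothing about which join algorithm SQL Server would pick.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE CUSTOMERTABLE (ID INTEGER PRIMARY KEY, Region TEXT);
    CREATE TABLE SALESORDERTABLE (IDColumn INTEGER PRIMARY KEY, CustomerID INTEGER);
    CREATE INDEX ix_order_customer ON SALESORDERTABLE (CustomerID);
    INSERT INTO CUSTOMERTABLE VALUES (1, 'west'), (2, 'east'), (3, 'west');
    INSERT INTO SALESORDERTABLE VALUES (100, 1), (101, 1), (102, 2), (103, 3);
""")

# Subquery form: keep orders whose customer is in the selected set.
where_in = conn.execute("""
    SELECT IDColumn
    FROM SALESORDERTABLE
    WHERE CustomerID IN (SELECT ID FROM CUSTOMERTABLE WHERE Region = 'west')
    ORDER BY IDColumn
""").fetchall()

# Join form: the same selection, expressed as part of the join condition.
inner_join = conn.execute("""
    SELECT IDColumn
    FROM SALESORDERTABLE
    INNER JOIN CUSTOMERTABLE
    ON ( SALESORDERTABLE.CustomerID = CUSTOMERTABLE.ID
         AND CUSTOMERTABLE.Region = 'west' )
    ORDER BY IDColumn
""").fetchall()

print(where_in)
print(inner_join)
```

If the joined column were not unique, the INNER JOIN could return an order more than once while the IN form would not, so the two are only interchangeable under that uniqueness assumption.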

@Tom,

I can't say that I know all that much about Set theory. I remember in college, in Math 11 I was about to walk into a mid-term exam and I suddenly realized that I didn't even know the difference between a "sequence" and a "series" :D What does that have to do with Set theory? Nothing, really except the class also studied Set theory.

When it comes to anything programming-related, all I can say is that I like to have an explanation for the way I do things; otherwise, I'm just haphazardly throwing code on the page. So, like you say, it's just about different developers seeing things differently; it just happens that I can (now) easily explain why I am doing things this particular way.

@Jon,

Word up! The optimizer is pretty awesome. I wish I had a strong grasp on what exactly *all* the things in the "EXPLAIN" result set indicated. I sort of get it; but, I don't always understand why it's doing things.

@Jaime,

Re: Views and performance, please take what I said with a grain of salt! I've only done light reading on the matter. Plus, when someone says that there is a performance concern, you never know what "scale" they are talking about... it's like when people say that throwing Exceptions is "expensive"... then you find out it's like several milliseconds more "expensive."

@Roland,

Excellent comment! First, I must say that I don't think I ever even thought about SQL from a semantic point of view. The only thing that I have ever thought about was "limit the cross product as early as possible." That said, I can say that on my Database midterm in college, I think I got a D+ :D ... ended up with B+ after the final, though... so I must have gotten better.

I don't think this was ever about out-smarting the optimizer - I think the optimizer is bananas good (and thank goodness for that!). To me, this was about just putting more thought into the query (and then, from there, into the data itself).

Plus, since a WHERE clause does not indicate any order to it (meaning, that one condition is run before another), I have seen developers lose themselves in the forest of it all and end up creating queries that are slow... and again, not because the optimizer isn't doing its job - because they don't have the underlying indices that would become more obvious (to me at least) in a JOIN clause that really called out context a bit more.

I can see that the optimizer is doing some cool stuff, especially when "EXPLAIN" will have something like, "Impossible WHERE condition." Clearly, it's doing all kinds of good analysis before it actually starts collecting record sets.

As a final note, I will definitely agree that you *have* to monitor your queries to really get at performance issues. I use something called, "Fusion Reactor." It wraps around your DB connection and does all sorts of logging and stat-collecting on the DB. It's saved my bacon a lot, seeing which queries suddenly take 13 seconds to run!

@Ben,

+1 for FusionReactor. Great product and a great company of people. We actually use all of their products and I can't recommend them enough.

As far as "order" within clauses, that's part of my point! Order is irrelevant. You describe the relations between data and the filtering criteria as separate entities, and it's up to SQL to figure out the best order of operations. The whole intent of only describing relationships in Joins and only putting filters in Where clauses is to help make it easier for the developer to reason about the data. I mean, it should make your Joins extremely easy to write, since joins are dictated by the database design, and should be unambiguous most of the time. If a database is designed well, the Joins should be basically self documenting, through primary and foreign keys, and Joins should be verifiably correct.

One of the things we look for in code reviews is having variables present in the From clause. If you have data that can change in the From clause (anything that would require a parameterized variable), it is immediately something that gets reviewed, since it's a code smell that the developer didn't understand the database schema.

As far as indexes, one of the great things that MSSQL does when showing query plans is suggest indexes to you. I have found that these suggestions are more helpful when you eliminate filtering criteria from your Join clauses. It helps you see more clearly whether the index is being suggested because your relationships are not physically defined at the database level or whether they are being suggested because of your filtering criteria.

One last note - if you're using SSMS to write your queries, I highly recommend SQLEnlight from Ubitsoft. It's a static code analyzer for SQL queries and stored procedures. It not only checks for conformance to best practices, but also finds performance bottlenecks for you, has really great index usage analysis, and all kinds of tools to make working with queries and query plans even easier. It makes actually enforcing your company's SQL standards a cinch and is completely customizable, with tons of rules you can enable or disable straight out of the box. It does refactoring, dead code analysis, suggests performance improvements, etc. Even if you don't write queries the same way I do, it is really easy to adapt to your own standards.

@Brian,

That's a really interesting story, because a WHERE-IN is definitely something that I would typically consider a "smell" when it comes to performant SQL. Anytime I can replace a WHERE-IN with an INNER JOIN, I consider it a victory (and it usually is, in my experience).

I have read that you can give hints to the index that the JOIN should use, but I haven't really had much experience with that. I *think* I've seen a number of cases where the optimizer actually forgoes any index at all if the table size is very small... maybe I am making that up, but I think I've seen that a number of times. I always sort of assumed that there was some overhead to using an index that the optimizer figured it would actually be faster just going to the table itself.

@Roland,

I've only tried FusionReactor - I know they have "Fusion Analytics", also, but I have not tried it yet. I hear nothing but great things about everything that they make.

Actually, we currently have a scheduled task that executes some sort of stats/reporting query. I am always seeing it pop up in FR because the query takes like 150+ seconds to run. Every time I think to go in and see what is happening, I notice that the SQL (as reported in FR with no white-space) takes up like 3-4 inches of vertical space on the screen. YIKES!

It sounds like there's a lot of interesting tools that I am missing out on. I currently just hand-write my SQL in Sublime Text (my IDE). The only tool that I really know is the use of "EXPLAIN" in the query UI. But, it never suggests any indices... at least, not that I know of. Of course, my understanding of even that stuff is fairly limited to "less rows" is better than "more rows" :D

I need to take some time to see what the world of SQL tooling has to offer. Heck maybe even my GUI of choice - Navicat - may have all kinds of stuff I don't even know about.

Anyway, you've given me a lot to think about. I can definitely see the pros of trying to keep the JOIN statements as simple as possible. I'll have to think more deeply about this.

@Ben -

One last thing to add, given your last comment. I highly suggest you try writing your SQL queries in SSMS with SQL Prompt from Red Gate, and SQL Enlight from Ubitsoft. It's like a dream - full intellisense, formatting, static analysis, etc. It really makes writing SQL feel like a first class citizen instead of an implementation detail. If you've never used a fully-loaded SSMS, it will change the way you view and approach SQL, I promise.

If you want to see it in action sometime, email me offline and I can give you a 10 minute tour.

@Roland,

I just watched their animated video. Back when I used to be on a SQL Server DB, I used what I think was something like, "SQL Manager". It was dead simple - just tables and a query editor. Then, at some point, the upgrade was to something like "SQL Server Management Studio" and it was like they took the previous tool and added a gazillion features onto it. I found it totally confusing. Probably because it was a huge paradigm shift from the previous double-click-to-open-table UI in the simplified ancestor.

I appreciate the offer. I'll see if I can get my hands on the demo (will it work for MySQL and will it work on a Mac?). We'll see.

I do not like Join Clause spam.

I do not like it Sam I am.

@Mark,

Would you like them with a compound clause?

Some ANDs and ORs to bring applause.

Good job, Ben Nadel. I got the concept of using joins. What I have understood so far is that we use joins for efficiency and to reduce query time.

I have looked through much of this chain and confess I have not read every comment, but so far I have not seen a reflection of my opinion.

I have been writing SQL in Ingres, Sybase, SQL Server, Oracle, and DB2 since the late 80's, when the war between the SQL standard and QUEL was just ending (about the same time as the VHS/Betamax war). What I have always liked about SQL is that it is a language that reflects the logic of set theory with mathematical precision and clear expression. The point was that it should be clear and readable. I love the way you like to organize your code to make it readable and easy to maintain. It is the optimizer's job to make it into something that runs efficiently. If SQL Server ends up with the same Query Execution Plan with either WHERE joins or ON joins, that is perfect!!! Kudos to Microsoft for building an excellent optimizer. We should demand no less from any other DBMS.

I was a consultant on a Sybase project in the 90's where I had to review every stored proc (20,000 of them) before they were allowed in production and clarity was essential for SQL. We used no 'ON' clauses. I prefer not to use them since they express a preference in the set theory that is not there in reality. For instance, if you have a set of 5 tables and they join:

a - b - c - d - e, I don't want to single one out as a master table and join the rest to it. I want the join to express the rules and the optimizer can choose the optimal path.

    WHERE a.a_Id = b.a_Id
    AND b.b_Id = c.b_Id
    AND c.c_Id = d.c_Id
    AND d.d_Id = e.d_Id
    AND e.statusFl = 'Y'

The other problem with the ON syntax is that it is wordy and obfuscates the logic. (I say in this wordy response.) Any good writer of fiction or non-fiction will tell you that the best way to increase clarity is to remove any unnecessary words. "LEFT OUTER JOIN (blah blah etc. )" is a lot more wordy than "z.Z_Id *= x.Z_Id".

Yes, optimizers are often idiots. I have been using DB2 UDB lately and there is no limit to its bad decisions. DB2 does not allow hints either. Hints are available in Sybase, SQL Server, and Oracle for when indexes are not taken up in a plan. For clarity's sake I like it when the hints are clearly called out and surrounded by parentheses, hash marks, or exclamations so you can visually exclude them from the logic.

I like the way you think and confess I have always wanted to run similar tests to see the quality of my optimizer.
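For anyone who wants to run that kind of comparison, here is a minimal sketch using Python's built-in `sqlite3` module. It shortens the chain to three tables (a - b - c), and the table layouts and sample rows are assumptions made up for illustration. It checks that the WHERE style and the ON style return the same rows, then prints the plan SQLite reports for each so the two can be compared by eye. Other databases have their own EXPLAIN output and may behave differently.

```python
import sqlite3

# Hypothetical three-table chain (a - b - c); columns and rows are invented.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE a (a_Id INTEGER PRIMARY KEY);
    CREATE TABLE b (b_Id INTEGER PRIMARY KEY, a_Id INTEGER);
    CREATE TABLE c (c_Id INTEGER PRIMARY KEY, b_Id INTEGER, statusFl TEXT);
    INSERT INTO a VALUES (1), (2);
    INSERT INTO b VALUES (10, 1), (20, 2);
    INSERT INTO c VALUES (100, 10, 'Y'), (200, 20, 'N');
""")

# Join rules and filter all expressed in the WHERE clause.
where_style = """
    SELECT a.a_Id, b.b_Id, c.c_Id
    FROM a, b, c
    WHERE a.a_Id = b.a_Id
      AND b.b_Id = c.b_Id
      AND c.statusFl = 'Y'
    ORDER BY a.a_Id
"""

# Join rules in ON clauses, filter in the WHERE clause.
on_style = """
    SELECT a.a_Id, b.b_Id, c.c_Id
    FROM a
    INNER JOIN b ON b.a_Id = a.a_Id
    INNER JOIN c ON c.b_Id = b.b_Id
    WHERE c.statusFl = 'Y'
    ORDER BY a.a_Id
"""

where_rows = conn.execute(where_style).fetchall()
on_rows = conn.execute(on_style).fetchall()
print("same rows:", where_rows == on_rows)

# The last column of each EXPLAIN QUERY PLAN row is the readable plan step.
for label, sql in (("WHERE style", where_style), ("ON style", on_style)):
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    print(label, [step[-1] for step in plan])
```

The row comparison only shows that the two forms are logically equivalent on this data; whether the plans match is something to read off the printed output for your own engine and schema.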

Harvey: I agree with most of what you say, but I still contend that using JOIN is better, especially when you are talking about OUTER JOIN. Putting the table-joining stuff into the ON clause for both means you don't have to worry about whether you need to deal with the NULL condition; an INNER JOIN will make sure your ON clause works, while an OUTER JOIN can skip records and put NULLs everywhere.

Using the *= or =* syntax has always been problematic for me, and I think it boils down to knowing which of them are needed for joining tables and which are filtering data. Yes, INNER/OUTER JOIN and ON are more wordy, but we're not talking COBOL ("move zeroes to ws-abc-123" instead of "ws-abc-123=0"). The grouping you get from the ON clause more than makes up for the few extra characters, plus you don't get accidental *= / = mismatches (where you needed to put *= but forgot and just put =, or vice versa).
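The joining-versus-filtering distinction matters most with outer joins. Below is a minimal sketch using Python's built-in `sqlite3` module; the `customer` and `invoice` tables and their columns are hypothetical, invented only for this illustration. The same condition gives different results depending on whether it sits in the ON clause or the WHERE clause of a LEFT OUTER JOIN.

```python
import sqlite3

# Hypothetical tables, invented for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE invoice (id INTEGER PRIMARY KEY, customerID INTEGER, isPaid INTEGER);
    INSERT INTO customer VALUES (1, 'Ann'), (2, 'Bob');
    INSERT INTO invoice VALUES (10, 1, 1), (20, 2, 0);
""")

# Condition in ON: it is part of the join rule, so every customer is kept
# and customers without a matching paid invoice get NULL for the invoice.
filter_in_on = conn.execute("""
    SELECT c.name, i.id
    FROM customer c
    LEFT OUTER JOIN invoice i
        ON i.customerID = c.id AND i.isPaid = 1
    ORDER BY c.id
""").fetchall()

# Condition in WHERE: it filters the joined rows, so customers whose
# invoice fails the test disappear from the result entirely.
filter_in_where = conn.execute("""
    SELECT c.name, i.id
    FROM customer c
    LEFT OUTER JOIN invoice i
        ON i.customerID = c.id
    WHERE i.isPaid = 1
    ORDER BY c.id
""").fetchall()

print(filter_in_on)     # Bob is present, with None for the invoice id
print(filter_in_where)  # Bob is absent
```

With the explicit syntax, the position of the condition states which behavior is intended, which is the grouping benefit described above.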

Despite violating the semantics, as outlined by Roland, I think you can argue that, in Ben's example above, what you want to join is the primary billing information, which is identified by the billing_information row with isPrimary=1.

If I think of it like that, then it makes more sense to me to keep the isPrimary=1 clause in the on-clause.

Since I have a short attention span, a single top-down path, without having to look up and down between the on-clause and the where-clause for filtering criteria, helps me understand the purpose of the join.

I think it concentrates the information where you need it.
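As a minimal sketch of that placement, using Python's built-in `sqlite3` module: the `account` table, the `accountID` column, and the sample rows are assumptions for illustration; only `billing_information` and `isPrimary` come from the discussion. For an INNER JOIN, moving `isPrimary = 1` between the ON clause and the WHERE clause does not change the rows returned, so the choice here is about readability.

```python
import sqlite3

# The account table, accountID column, and all rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE account (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE billing_information (
        id INTEGER PRIMARY KEY,
        accountID INTEGER,
        isPrimary INTEGER
    );
    INSERT INTO account VALUES (1, 'Acme'), (2, 'Globex');
    INSERT INTO billing_information VALUES (10, 1, 1), (11, 1, 0), (20, 2, 1);
""")

# Everything that defines "the primary billing record" sits with the join.
in_on = conn.execute("""
    SELECT a.name, bi.id
    FROM account a
    INNER JOIN billing_information bi
        ON bi.accountID = a.id AND bi.isPrimary = 1
    ORDER BY a.id
""").fetchall()

# Same query with the isPrimary test moved down into the WHERE clause.
in_where = conn.execute("""
    SELECT a.name, bi.id
    FROM account a
    INNER JOIN billing_information bi
        ON bi.accountID = a.id
    WHERE bi.isPrimary = 1
    ORDER BY a.id
""").fetchall()

print(in_on == in_where)
```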

There is also the aspect of creating queries yourself or having to understand someone else's query.

You may well be able to create a query with the where-clause mentioned above:

    WHERE a.a_Id = b.a_Id
    AND b.b_Id = c.b_Id
    AND c.c_Id = d.c_Id
    AND d.d_Id = e.d_Id
    AND e.statusFl = 'Y'

And as long as you've created this yourself, you know that this is correct, and it makes perfect sense to you. But for your colleague who needs to review your query it may not be so obvious.

In order for the colleague to see if it is correct, she'll have to look at the table aliases and see if each and every one is joined in a logical way. Every other colleague who touches this query has to do the same.

For these kinds of queries I find myself rewriting them to Ben's style of writing.

With respect to the *= outer join style, this is sometimes ambiguous.

See: http://infocenter.sybase.com/help/topic/com.sybase.infocenter.dc32300.1570/html/sqlug/sqlug162.htm

On http://db-pub.com/forum-80491746/difference-between-and-left-outer-join.html it is stated that this style has been removed in SQL Server 2005.

If a query is joining multiple tables, each of which has some filter conditions, I find the clearest way to handle it is to use a WITH clause, apply filters to the individual tables, then reference the aliases in the main query. For example:

    WITH
    t1 AS ( SELECT * FROM table1 WHERE ... ),
    t2 AS ( SELECT * FROM table2 WHERE ... )
    SELECT ...
    FROM t1
    JOIN t2 ON t2.abc = t1.abc
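A runnable version of this pattern, as a minimal sketch using Python's built-in `sqlite3` module (SQLite supports the WITH clause). The `isActive` and `kind` columns, the filter conditions, and the sample rows are made-up stand-ins for the elided parts of the query.

```python
import sqlite3

# Column names other than abc, and all rows, are invented placeholders.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE table1 (abc INTEGER, isActive INTEGER);
    CREATE TABLE table2 (abc INTEGER, kind TEXT);
    INSERT INTO table1 VALUES (1, 1), (2, 0), (3, 1);
    INSERT INTO table2 VALUES (1, 'x'), (2, 'x'), (3, 'y');
""")

# Each CTE applies its own table's filter; the main query only joins.
rows = conn.execute("""
    WITH
        t1 AS ( SELECT abc FROM table1 WHERE isActive = 1 ),
        t2 AS ( SELECT abc, kind FROM table2 WHERE kind = 'x' )
    SELECT t1.abc, t2.kind
    FROM t1
    JOIN t2 ON t2.abc = t1.abc
""").fetchall()

print(rows)
```

Only the row that passes both filters and has a match on `abc` survives the join.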