.NET Microservices: Architecture For Containerized .NET Applications

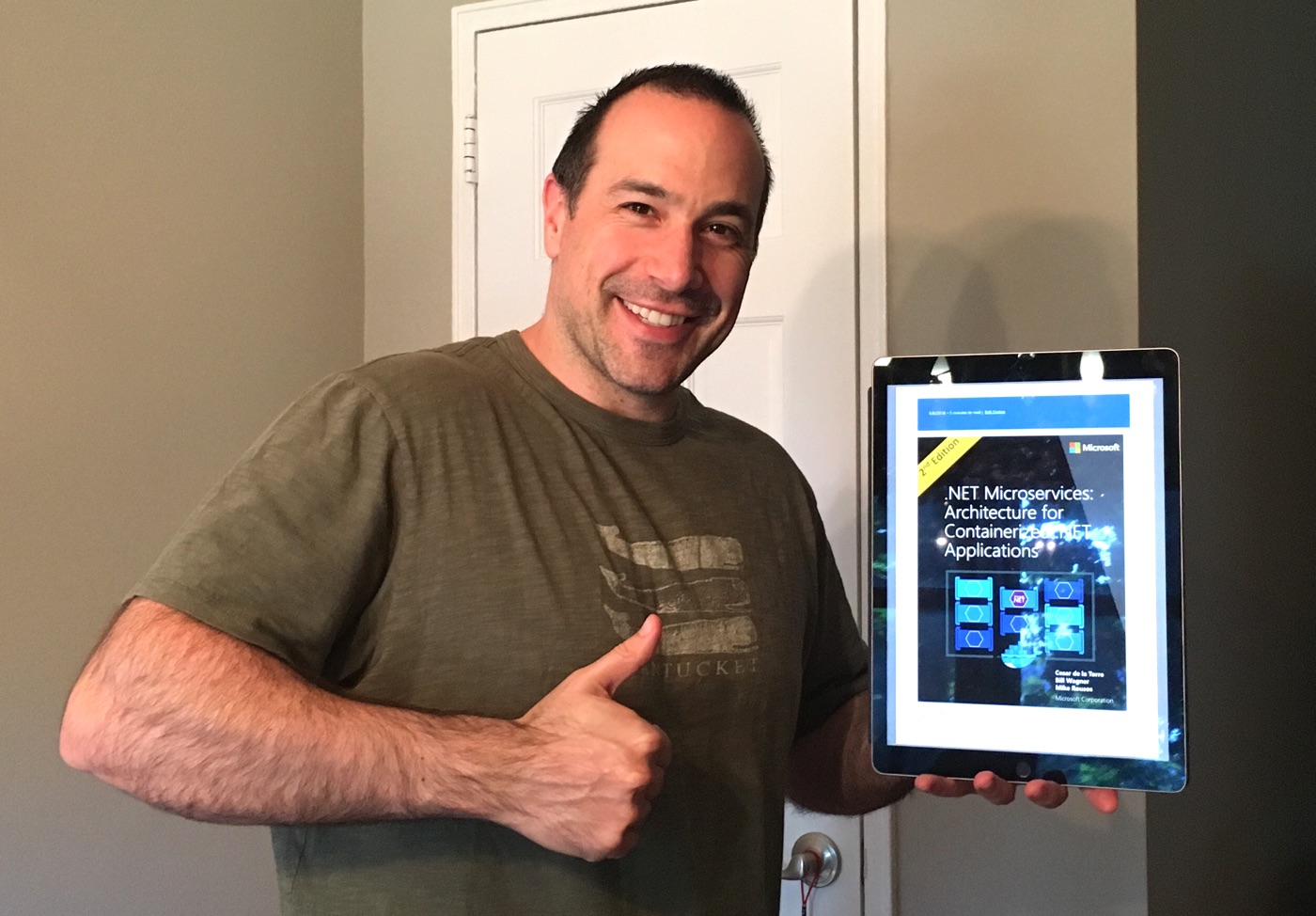

Last week at InVision, Tom Lee passed around a few links to articles that discussed handling failure in a distributed system. These articles turned out to be chapters in a digital book published by Microsoft: .NET Microservices: Architecture For Containerized .NET Applications by Cesar de la Torre, Bill Wagner, and Mike Rousos. I don't know anything about .NET; but, I am heavily invested in understanding both microservices and containerization. So, when I saw that the book was available as a free PDF download, I loaded it into iBooks and gave it a read.

Even as someone who hasn't looked at .NET in about 15 years, I really enjoyed this book. Half of the book is a technology-independent discussion of application and system architecture. The other half of the book is about applying said architecture practices in a .NET and Azure context. To be honest, I essentially skipped over all the .NET-specific stuff; and, I still found loads of juicy information to consume.

The book covers a huge variety of topics on application architecture. As such, it doesn't cover any one topic in exhaustive depth. But, this makes the book easy to get through and endows it with a good pace. It's essentially a large collection of small articles, each of which takes 2-5 minutes to read.

That said, the short chapters in the book are still very thought-provoking. The topics that I found especially engaging were those on microservice size and autonomous boundary identification; domain driven design (DDD) and its application at the macro and micro levels; and, the focus on eventual consistency and the need for event-driven asynchronous communication across microservices.

Essentially, I liked all the parts of the book that made me feel like a fraud because those are the parts that I know will help me grow as an applications architect.

Having "cut my teeth" - and finding much success - in the world of monolithic applications, it is very hard for me to wrap my head around decomposing features that were once in the same code-base. After all, those features were all together because they were - or so I thought - needed as part of one "cohesive" application. So, while I appreciate wanting to break features apart, I have a lot of trouble finding the appropriate seams.

The book basically starts out by addressing this very issue:

Each microservice implements a specific end-to-end domain or business capability within a certain context boundary, and each must be developed autonomously and be deployable independently. Finally, each microservice should own its related domain data model and domain logic (sovereignty and decentralized data management) based on different data storage technologies (SQL, NoSQL) and different programming languages.

.... When developing a microservice, size should not be the important point. Instead, the important point should be to create loosely coupled services so you have autonomy of development, deployment, and scale, for each service. Of course, when identifying and designing microservices, you should try to make them as small as possible as long as you do not have too many direct dependencies with other microservices. More important than the size of the microservice is the internal cohesion it must have and its independence from other services. (Page 38)

.... your goal should be to get to the most meaningful separation guided by your domain knowledge. The emphasis is not on the size, but instead on business capabilities. In addition, if there is clear cohesion needed for a certain area of the application based on a high number of dependencies, that indicates the need for a single microservice, too. Cohesion is a way to identify how to break apart or group together microservices. Ultimately, while you gain more knowledge about the domain, you should adapt the size of your microservice, iteratively. Finding the right size is not a one-shot process. (Page 50)

.... It is similar to the Inappropriate Intimacy code smell when implementing classes. If two microservices need to collaborate a lot with each other, they should probably be the same microservice. (Page 193)

One thing I really liked about this book was that it made service size a bit of a second-class citizen. Yes, it urged people to create small services. But, more importantly, it urged people to create "right-sized services." And, that these services can and will be broken up and re-merged over time. This feels much healthier than the "no service should have more than N lines of code" dogma that I see in some microservice heuristics.

Part of my struggle in finding the "right size" of a service is likely that my monolithic architectures have tight, cross-module coupling internally. As such, it's hard to see how to break the monolith apart without making it a web of interconnected HTTP calls. Which, the more I read about, turns out to be the absolute worst of all worlds, combining the tight-coupling and fragility of the monolith with the complexity and latency of a distributed system:

A direct conversion from in-process method calls into RPC (Remote Procedure Calls) calls to services will cause a chatty and not efficient communication that will not perform well in distributed environments.... One solution involves isolating the business microservices as much as possible. You then use asynchronous communication between the internal microservices and replace fine-grained communication that is typical in intra-process communication between objects with coarser-grained communication. You can do this by grouping calls, and by returning data that aggregates the results of multiple internal calls, to the client. (Page 60)

It seems that asynchronous communication is really the secret sauce of a microservices architecture. In fact, after reading this book, I'm having trouble believing that a complex, distributed system can actually work any other way. At least not efficiently or with high-availability.

Ideally, you should try to minimize the communication between the internal microservices. The less communications between microservices, the better. But of course, in many cases you will have to somehow integrate the microservices. When you need to do that, the critical rule here is that the communication between the microservices should be asynchronous. That does not mean that you have to use a specific protocol (for example, asynchronous messaging versus synchronous HTTP). It just means that the communication between microservices should be done only by propagating data asynchronously, but try not to depend on other internal microservices as part of the initial service's HTTP request/response operation.

If possible, never depend on synchronous communication (request/response) between multiple microservices, not even for queries. The goal of each microservice is to be autonomous and available to the client consumer, even if the other services that are part of the end-to-end application are down or unhealthy. If you think you need to make a call from one microservice to other microservices (like performing an HTTP request for a data query) in order to be able to provide a response to a client application, you have an architecture that will not be resilient when some microservices fail. (Page 61)

At work, we've actually been talking a lot about this asynchronous communication. Specifically, about how to ensure that asynchronous integration events are propagated to other systems. The big fear, of course, is that the current process dies after changing the state of the service but before the event can be pushed to an event bus (like Kafka).

One idea that we've been noodling on is using an intermediary event to denote the desired state change. Then, that event would be processed over and over (ideally only once) until both the state mutation and the posting of a subsequent "integration event" are successful.

In the book, this idea was also mirrored, but with the use of a "transactional database table":

A balanced approach is a mix of a transactional database table and a simplified ES (Event Sourcing) pattern. You can use a state such as "ready to publish the event," which you set in the original event when you commit it to the integration events table. You then try to publish the event to the event bus. If the publish-event action succeeds, you start another transaction in the origin service and move the state from "ready to publish the event" to "event already published."

If the publish-event action in the event bus fails, the data still will not be inconsistent within the origin microservice - it is still marked as "ready to publish the event," and with respect to the rest of the services, it will eventually be consistent. You can always have background jobs checking the state of the transactions or integration events. If the job finds an event in the "ready to publish the event" state, it can try to republish that event to the event bus. (Page 163)
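To make the "ready to publish" idea a little more concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The table names, the `place_order` and `publish_pending` functions, and the `publish` callback are all hypothetical stand-ins, not anything taken from the book. The sketch assumes that the event row is written in the same local transaction as the state change, and that a background job calls `publish_pending` periodically.

```python
import sqlite3

# Hypothetical state names mirroring the two states described in the quote.
READY = "ready_to_publish"
PUBLISHED = "published"


def create_schema(db):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT NOT NULL)")
    db.execute(
        "CREATE TABLE integration_events ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "payload TEXT NOT NULL, "
        "state TEXT NOT NULL)"
    )


def place_order(db, order_id):
    # One local transaction: the state change and the event row are
    # committed together, or rolled back together.
    with db:
        db.execute("INSERT INTO orders (id, status) VALUES (?, 'placed')", (order_id,))
        db.execute(
            "INSERT INTO integration_events (payload, state) VALUES (?, ?)",
            (f"OrderPlaced:{order_id}", READY),
        )


def publish_pending(db, publish):
    """Try to publish every event still marked READY; return how many succeeded."""
    rows = db.execute(
        "SELECT id, payload FROM integration_events WHERE state = ? ORDER BY id",
        (READY,),
    ).fetchall()
    published = 0
    for event_id, payload in rows:
        try:
            publish(payload)  # assumed to raise if the event bus is unavailable
        except Exception:
            continue  # the row stays READY and is retried on the next run
        # Separate transaction: flip the state only after a successful publish.
        with db:
            db.execute(
                "UPDATE integration_events SET state = ? WHERE id = ?",
                (PUBLISHED, event_id),
            )
        published += 1
    return published
```

Note that if the process dies after `publish` succeeds but before the state is updated, the next run publishes the same event again. In other words, this sketch gives at-least-once delivery, so consumers have to tolerate duplicates.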

Now, to be honest, we also discussed a similar approach at work. But, the big hesitation that I had with the idea of a "transactional table" was that I thought it meant that the internal event creation had to be in the same transaction as the domain-model transaction. And, having such a broad transactional scope felt uncomfortable.

Which leads nicely into another part of the book that I really liked: the discussion of Domain Driven Design (DDD), the repository pattern, and transactional scope. According to DDD thought-leaders like Eric Evans and Vaughn Vernon, a transaction should only wrap around the creation and mutation of a single "aggregate":

Many DDD authors like Eric Evans and Vaughn Vernon advocate the rule that one transaction = one aggregate and therefore argue for eventual consistency across aggregates. For example, in his book Domain-Driven Design, Eric Evans says this (Page 232):

"Any rule that spans Aggregates will not be expected to be up-to-date at all times. Through event processing, batch processing, or other update mechanisms, other dependencies can be resolved within some specific time. (Page 128)"

On top of that, the book makes it clear that a repository abstraction should only pertain to a single aggregate:

For each aggregate or aggregate root, you should create one repository class. In a microservice based on domain-driven design patterns, the only channel you should use to update the database should be the repositories. This is because they have a one-to-one relationship with the aggregate root, which controls the aggregate's invariants and transactional consistency. It is okay to query the database through other channels (as you can do following a CQRS approach), because queries do not change the state of the database. However, the transactional area - the updates - must always be controlled by the repositories and the aggregate roots. (Page 237)

Together, these two passages (and many others not quoted) back up the inkling that I've had for a long time that the transaction should actually be defined inside of the repository itself. Such an approach prevents the database implementation from leaking into the "application layer". And, it prevents developers from creating too much coupling between discrete sets of data. This decoupling also paves the way for event-based synchronization, whether in the form of "domain events" or "integration events" (both of which are discussed in the book).
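As a rough illustration of what "the transaction lives inside the repository" might look like, here is a small Python sketch using `sqlite3`. The `Order` and `OrderRepository` names, the table layout, and the `save` / `get` methods are my own assumptions for the sake of the example; the book's samples are in C# and are structured differently.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class Order:
    id: int
    items: list = field(default_factory=list)  # list of (sku, quantity) tuples


class OrderRepository:
    """Hypothetical repository for exactly one aggregate: Order."""

    def __init__(self, db):
        self._db = db
        with self._db:
            self._db.execute("CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY)")
            self._db.execute(
                "CREATE TABLE IF NOT EXISTS order_items ("
                "order_id INTEGER NOT NULL, sku TEXT NOT NULL, quantity INTEGER NOT NULL)"
            )

    def save(self, order):
        # The transaction begins and ends here; callers in the application
        # layer never see a connection, cursor, or commit.
        with self._db:
            self._db.execute("INSERT OR REPLACE INTO orders (id) VALUES (?)", (order.id,))
            self._db.execute("DELETE FROM order_items WHERE order_id = ?", (order.id,))
            self._db.executemany(
                "INSERT INTO order_items (order_id, sku, quantity) VALUES (?, ?, ?)",
                [(order.id, sku, quantity) for sku, quantity in order.items],
            )

    def get(self, order_id):
        row = self._db.execute("SELECT id FROM orders WHERE id = ?", (order_id,)).fetchone()
        if row is None:
            return None
        items = self._db.execute(
            "SELECT sku, quantity FROM order_items WHERE order_id = ? ORDER BY rowid",
            (order_id,),
        ).fetchall()
        return Order(id=order_id, items=list(items))
```

Because `save` wraps the whole aggregate in one transaction, a failure part-way through (say, an invalid item) rolls everything back and leaves the previously stored order untouched.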

NOTE: On the topic of repository abstractions, I've also noodled on the ability to encapsulate uniqueness constraints within a repository in order to facilitate idempotent actions. Long story short, uniqueness constraints are well supported by a variety of databases.

Now, in an event-based synchronization system, regardless of transactional boundaries, there's always a chance that an event will be delivered more than once. Which is why the book also emphasizes that event processing has to be designed from the start to be idempotent. Meaning, it is safe to re-process an event if any part of the workflow fails.

An important aspect of update message events is that a failure at any point in the communication should cause the message to be retried. Otherwise a background task might try to publish an event that has already been published, creating a race condition. You need to make sure that the updates are either idempotent or that they provide enough information to ensure that you can detect a duplicate, discard it, and send back only one response.

As noted earlier, idempotency means that an operation can be performed multiple times without changing the result. In a messaging environment, as when communicating events, an event is idempotent if it can be delivered multiple times without changing the result for the receiver microservice.

.... Some message processing is inherently idempotent. For example, if a system generates image thumbnails, it might not matter how many times the message about the generated thumbnail is processed; the outcome is that the thumbnails are generated and they are the same every time. On the other hand, operations such as calling a payment gateway to charge a credit card may not be idempotent at all. In these cases, you need to ensure that processing a message multiple times has the effect that you expect. (Page 169)
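One common way to detect and discard a duplicate is to record each event ID under a uniqueness constraint before applying its effect. Here is a minimal Python sketch of that idea using `sqlite3`. The `processed_events` and `charges` tables and the `handle_payment_event` function are hypothetical. The "charge" is modeled as a local row only; a call to a real payment gateway sits outside the database transaction and would need its own deduplication, which this sketch does not cover.

```python
import sqlite3


def create_schema(db):
    # The PRIMARY KEY doubles as the uniqueness constraint on event IDs.
    db.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
    db.execute(
        "CREATE TABLE charges (order_id INTEGER NOT NULL, amount_cents INTEGER NOT NULL)"
    )


def handle_payment_event(db, event_id, order_id, amount_cents):
    """Apply the event once. Return True if applied, False if it was a duplicate."""
    with db:
        cursor = db.execute(
            "INSERT OR IGNORE INTO processed_events (event_id) VALUES (?)",
            (event_id,),
        )
        if cursor.rowcount == 0:
            return False  # already seen: discard without side effects
        # Recording the event ID and applying its effect share one transaction,
        # so a failure here also un-records the event and allows a clean retry.
        db.execute(
            "INSERT INTO charges (order_id, amount_cents) VALUES (?, ?)",
            (order_id, amount_cents),
        )
    return True
```

Delivering the same event twice then results in a single charge row, since the second delivery is ignored.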

Apologies if I seem to be jumping around all over the place. I highlighted a lot of passages as I was reading this book; and, I've only quoted a fraction of them in this review. As such, it's hard to tie them together coherently without just re-publishing the book!

Ultimately, this book really got my machinery firing. I've had a lot of thoughts swirling around in my head over the last year or so; and, I think this book really helped bring a lot of those thoughts into focus. I certainly don't have all the answers yet; but, I think this book gave me some fantastic techniques for thinking effectively about architecture questions in a microservice-based system.

Or, any system for that matter. In fact, a lot of what I read in this book would lend itself very well to the refactoring of a monolithic application. Perhaps that's a good way to get some hands-on experience: start refactoring the monolith that I currently work on.

In any event, I definitely recommend .NET Microservices: Architecture For Containerized .NET Applications. It's an easy read that covers a lot of interesting topics in both system design and service design. And, it's totally FREE. So, how can you argue with that!

A Few More Quotes To Give Some More Flavor

As I said earlier, I highlighted a lot of passages in the book. But, I couldn't find a way to intelligently tie them all together in the review. As such, I just wanted to close by sharing a few additional quotes that struck a chord in me.

One thing that I've always struggled with is how much a particular persistence technology leaks into higher layers of an application. I had thought that patterns like the Repository would keep a clean boundary. But, the book seems to indicate that trying to keep such a clean boundary is actually counter-productive. I'm not sure how I feel about this:

If you implemented your domain model based on POCO entity classes, agnostic to the infrastructure persistence, it might look like you could move to a different persistence infrastructure, even from relational to NoSQL. However, that should not be your goal. There are always constraints in the different databases will push you back, so you will not be able to have the same model for relational or NoSQL databases. Changing persistence models would not be trivial, because transactions and persistence operations will be very different. (Page 253)

I've always felt that people use DRY (Don't Repeat Yourself) inappropriately. As such, it was comforting to see that the book emphasized decoupling as a priority over DRYness (at least in some cases):

Just as the view model and the domain model are different, view model validation and domain model validation might be similar but serve a different purpose. If you are concerned about DRY (the Don't Repeat Yourself principle), consider that in this case code reuse might also mean coupling, and in enterprise applications it is more important not to couple the server side to the client side than to follow the DRY principle. (Page 225)

I really appreciated that the book took a pragmatic viewpoint on techniques like Domain Driven Design (DDD). And, in fact, championed the "anemic domain model" in cases where there was no significant business logic to implement.

This pragmatism was actually touted as one of the benefits of a microservices architecture - that each service could use a programming style that made sense for the kind of data and logic that it owned. Simpler services can use simpler strategies; more complex services can use more complex strategies. That's the whole point of the microservices autonomy!

Sometimes these DDD technical rules and patterns are perceived as obstacles that have a steep learning curve for implementing DDD approaches. But the important part is not the patterns themselves, but organizing the code so it is aligned to the business problems, and using the same business terms (ubiquitous language). In addition, DDD approaches should be applied only if you are implementing complex microservices with significant business rules. Simpler responsibilities, like a CRUD service, can be managed with simpler approaches. (Page 193)

.... if your microservice or Bounded Context is very simple (a CRUD service), the anemic domain model in the form of entity objects with just data properties might be good enough, and it might not be worth implementing more complex DDD patterns. In that case, it will be simply a persistence model, because you have intentionally created an entity with only data for CRUD purposes. (Page 198)

I mentioned Domain Driven Design and transactional boundaries earlier in this review. But, another thing that I wanted to point out was that the book recommends that "foreign keys" be left as simple identifiers within an aggregate (as opposed to being able to navigate internally from one aggregate to another). This aggregate isolation, combined with the concept of transactional boundaries, starts to make it really clear to me which bits of "logic" should be in the domain model vs. which bits should be in the application layer.

For example, I might be tempted to put some "role based permissions" stuff inside a low-level aggregate. But, this would be problematic because 1) the role definition does not need to be kept consistent with the other data (i.e., it does not pertain to the same transactional boundary) and 2) reading the role information would require navigation through the foreign key. As such, it's a good indication that the permission logic (at least in this case) would need to be pushed up a layer, into the application logic.

You usually define an aggregate based on the transactions that you need. A classic example is an order that also contains a list of order items. An order item will usually be an entity. But it will be a child entity within the order aggregate, which will also contain the order entity as its root entity, typically called an aggregate root.

Identifying aggregates can be hard. An aggregate is a group of objects that must be consistent together, but you cannot just pick a group of objects and label them an aggregate. You must start with a domain concept and think about the entities that are used in the most common transactions related to that concept. Those entities that need to be transactionally consistent are what forms an aggregate. Thinking about transaction operations is probably the best way to identify aggregates. (Page 198)

.... The purpose of an aggregate root is to ensure the consistency of the aggregate; it should be the only entry point for updates to the aggregate through methods or operations in the aggregate root class. You should make changes to entities within the aggregate only via the aggregate root. It is the aggregate's consistency guardian, taking into account all the invariants and consistency rules you might need to comply with in your aggregate. If you change a child entity or value object independently, the aggregate root cannot ensure that the aggregate is in a valid state. It would be like a table with a loose leg. Maintaining consistency is the main purpose of the aggregate root.

.... In order to maintain separation of aggregates and keep clear boundaries between them, it is a good practice in a DDD domain model to disallow direct navigation between aggregates and only having the foreign key (FK) field... (Page 199)
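The order / order-item example from these passages can be sketched in a few lines of Python. Everything here is hypothetical and illustrative (the class names, the "quantity must be positive" invariant, the merge rule for repeated products); the book's own samples are in C#. The two things the sketch tries to show are that all changes go through the aggregate root, and that references to other aggregates are held as plain IDs rather than as navigable objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderItem:
    """Child entity; only ever created or replaced through the Order root."""
    product_id: int  # plain foreign key, not a reference to a Product aggregate
    quantity: int
    unit_price_cents: int


class Order:
    """Aggregate root: the single entry point for changes to the aggregate."""

    def __init__(self, order_id, customer_id):
        self.id = order_id
        self.customer_id = customer_id  # foreign key only; no Customer object here
        self._items = []

    def add_item(self, product_id, quantity, unit_price_cents):
        # Invariants are checked in one place, by the root.
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        for index, item in enumerate(self._items):
            if item.product_id == product_id:
                # Same product again: merge quantities, keeping the original price.
                self._items[index] = OrderItem(
                    product_id, item.quantity + quantity, item.unit_price_cents
                )
                return
        self._items.append(OrderItem(product_id, quantity, unit_price_cents))

    @property
    def items(self):
        # Expose a read-only snapshot so callers cannot bypass the root.
        return tuple(self._items)

    def total_cents(self):
        return sum(item.quantity * item.unit_price_cents for item in self._items)
```

Anything that needs the customer's roles or the product's details would have to look them up by ID in the application layer, which is exactly the pressure that pushes that kind of logic out of the aggregate.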

One question that I've posed at work is, even with an event-driven data synchronization protocol, how do you deal with "new services" that come on-line after the application has been launched? The book touches on this problem briefly, but only to say that it should be handled asynchronously like all other inter-service communication. But, I didn't really find any concrete advice on what that actually looks like or how one might go about implementing this in a production application.

And finally (and this is where most of the issues arise when building microservices), if your initial microservice needs data that is originally owned by other microservices, do not rely on making synchronous requests for that data. Instead, replicate or propagate that data (only the attributes you need) into the initial service's database by using eventual consistency (typically by using integration events, as explained in upcoming sections).... duplicating some data across several microservices is not an incorrect design - on the contrary, when doing that you can translate the data into the specific language or terms of that additional domain or Bounded Context. (Page 62)
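Here is a toy Python sketch of that "replicate only the attributes you need" idea. The `InMemoryBus`, `CustomerService`, and `OrderingService` classes, along with the `CustomerChanged` event name, are all made up for illustration. The bus here calls handlers immediately and in-process, purely to keep the example runnable; a real event bus would deliver the event asynchronously, and the replica would lag behind the owner for a while.

```python
from collections import defaultdict


class InMemoryBus:
    """Stand-in for a real event bus; delivers synchronously for simplicity."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)


class CustomerService:
    """Owns customer data and announces changes as integration events."""

    def __init__(self, bus):
        self._bus = bus
        self._customers = {}

    def update(self, customer_id, name, email):
        self._customers[customer_id] = {"name": name, "email": email}
        self._bus.publish(
            "CustomerChanged", {"id": customer_id, "name": name, "email": email}
        )


class OrderingService:
    """Keeps its own copy of just the attribute it needs (the name)."""

    def __init__(self, bus):
        self._buyers = {}  # local replica, in this service's own terms ("buyer")
        bus.subscribe("CustomerChanged", self._on_customer_changed)

    def _on_customer_changed(self, payload):
        self._buyers[payload["id"]] = payload["name"]  # email is deliberately dropped

    def shipping_label(self, customer_id):
        # No synchronous call to CustomerService is needed to answer this.
        return f"Ship to: {self._buyers[customer_id]}"
```

The ordering side can keep answering `shipping_label` from its own copy even if the customer service is down, at the cost of possibly serving a slightly stale name. It does not address the "new service comes on-line later" question above, since a late subscriber would have missed every earlier event.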

Anyway, I just wanted to share a few random passages to help paint a better picture of the book. Hopefully, these demonstrate the breadth and depth of the content. Like I said earlier, I found it all very inspirational while still being easy to read. But, it's clearly something that I need more hands-on experience with before some of these practices will make complete sense.
