Does The HTTP Response Stream Need Error Event Handlers In Node.js?

When you start programming in Node.js, one of the first things you discover is that a Node.js application is "amusingly" brittle; one uncaught error and your entire application comes to a grinding halt. This is especially true when dealing with Streams (which inherit error-handling behavior from EventEmitter). Because of this, you often have to bind error-event handlers to every stream you use. But, what about the HTTP response stream in an HTTP server? I've never seen anyone bind an error-event handler to one of these streams, which makes me wonder - does the HTTP response stream even need error-event binding?

In the HTTP module documentation, there is one note about the HTTP server class and error events:

Event: "clientError." If a client connection emits an "error" event, it will be forwarded here.

First, this seems to only deal with the connection, not the actual stream. And, even so, it doesn't mention that any errors will be suppressed, only that they will be forwarded to a different EventEmitter. As such, I don't find this note of much practical value.
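To make that note a little more concrete, here is a minimal sketch of what binding a "clientError" handler might look like. The handler name and the 400 response are my own assumptions for illustration; the ( error, socket ) arguments are what the documentation describes. As I read the documentation, once you bind your own listener, closing the socket becomes your responsibility.

```javascript
// Require our core node modules.
var http = require( "http" );

// Create a bare-bones HTTP server (hypothetical - just enough to bind the event).
var httpServer = http.createServer(
    function handleHttpRequest( request, response ) {
        response.end( "OK" );
    }
);

// Listen for connection-level errors that get forwarded to the server.
httpServer.on( "clientError", handleClientError );

// I handle errors emitted by a client connection (hypothetical handler name).
function handleClientError( error, socket ) {
    console.log( "Client error:", error.message );

    // ASSUMPTION: With our own listener bound, we are the ones who have to close the
    // socket. If it can still be written to, send a bare 400 response on the way out.
    if ( socket.writable ) {
        socket.end( "HTTP/1.1 400 Bad Request\r\n\r\n" );
    } else {
        socket.destroy();
    }
}
```

Calling httpServer.listen( 8080 ) would start this server. Note that this only observes errors on the connection; it still says nothing about the response stream itself.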

To dig a little deeper into the HTTP request / response lifecycle, I tried my hardest to come up with scenarios that would or could trigger HTTP errors. Since I am new to Node.js (and know next to nothing about networking), it's hard for me to think about all the possible ways in which an HTTP request can break. So, for this exploration, I have limited my approach to things that can be tested programmatically: timeouts, delays, and aborts.

To start, I created a simple HTTP file-server that has two special characteristics:

- It sets a 100ms timeout. This timeout [seems to] affect the idle connection timeout for both the incoming request and the outgoing response (though the documentation on this is very unclear).

- It pipes the file content through a "Through" stream, which delays each chunk by 5 seconds. I did this to make sure that the response stream, itself, can't buffer all of the data immediately.

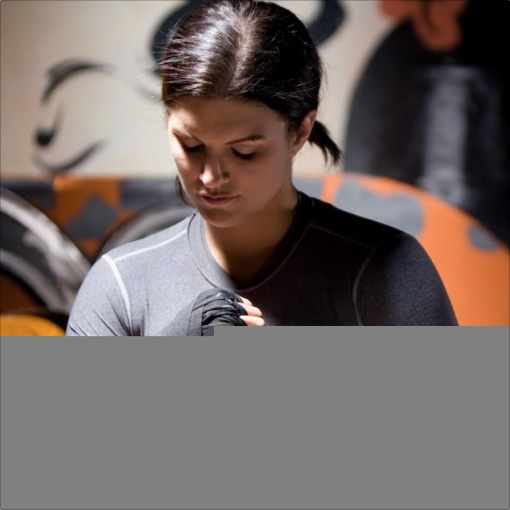

This is the image that I am serving up from the HTTP server (Gina Carano of Haywire and In the Blood fame - awesome movies!):

And, here is the actual HTTP server code:

// Require our core node modules.
var http = require( "http" );
var fileSystem = require( "fs" );
var stream = require( "stream" );
var util = require( "util" );


// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //


// Create an instance of our HTTP server.
var httpServer = http.createServer( handleHttpRequest );

// Attempt to set a request-timeout for incoming requests.
// --
// NOTE: While the documentation on this property is not very robust, experimentation
// indicates that this value affects the idle time for both the incoming request and
// the outgoing response.
httpServer.setTimeout( 100 );

httpServer.listen( 8080 );


// I handle the incoming HTTP request.
function handleHttpRequest( request, response ) {

    console.log( ">>>> Serving request <<<<" );

    // Start our response.
    response.writeHead(
        200,
        "OK",
        {
            "Content-Type": "image/jpeg"
        }
    );

    // Listen for the close and finish events on the response. Only one will fire,
    // depending on how the response is handled.
    response
        // "close" from the documentation:
        // --
        // >> Indicates that the underlying connection was terminated before
        // >> response.end() was called or able to flush.
        .on(
            "close",
            function handleCloseEvent() {
                console.log( "Request closed prematurely." );
            }
        )
        // "finish" from the documentation:
        // --
        // >> Emitted when the response has been sent. More specifically, this event is
        // >> emitted when the last segment of the response headers and body have been
        // >> handed off to the operating system for transmission over the network. It
        // >> does not imply that the client has received anything yet.... After this
        // >> event, no more events will be emitted on the response object.
        .on(
            "finish",
            function handleFinishEvent() {
                console.log( "Request finished successfully." );
            }
        )
    ;

    // Pipe the file read stream through a "Slow Through" stream instance so we can
    // make sure that writing to the response doesn't happen too quickly. This will give
    // us a chance to kill the request before it actually handles all the data.
    fileSystem.createReadStream( "./gina-carano.jpg" )
        .pipe( new SlowThrough( 5 * 1000 ) ) // 5-second delay for each chunk.
        .pipe( response )
    ;

}


// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //


// I am a simple pass-through stream that delays each pass-through chunk.
function SlowThrough( delay ) {

    stream.Transform.call( this );

    this._delay = delay;

}

util.inherits( SlowThrough, stream.Transform );


// I pass each chunk through to the Readable stream, and then move on to the next chunk
// after the given delay.
SlowThrough.prototype._transform = function( chunk, encoding, getNextChunk ) {

    console.log( "Slowly transforming %d bytes.", chunk.length );

    this.push( chunk, encoding );

    // Don't move on to the next chunk right away, make them wait. Let the tension build.
    setTimeout( getNextChunk, this._delay );

};

Note that I am logging two events on the response - "close" and "finish". Close indicates that the response was closed prematurely and finish indicates that the response was closed successfully.
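As a side note, newer versions of the Node.js documentation describe "close" as firing both when the response completes and when the underlying connection terminates prematurely, so on a more recent runtime both events may fire for the same response. The following is a small sketch of one way to tell the two cases apart. It assumes a Node.js version that exposes the writableFinished property on the response, and the describeClose() helper is a hypothetical name of my own.

```javascript
// Require our core node modules.
var http = require( "http" );

// I describe how the given response ended (hypothetical helper).
// --
// ASSUMPTION: The runtime exposes response.writableFinished, which becomes true once
// all of the response data has been flushed to the underlying system.
function describeClose( response ) {
    return( response.writableFinished ? "finished successfully" : "closed prematurely" );
}

// Create a bare-bones HTTP server that logs how each response ended.
var httpServer = http.createServer(
    function handleHttpRequest( request, response ) {
        response.on(
            "close",
            function handleCloseEvent() {
                console.log( "Request " + describeClose( response ) + "." );
            }
        );
        response.end( "OK" );
    }
);
```

On the older runtime used for these tests, the two separate event handlers serve the same purpose.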

Ok, next, I created a request script that would hold the outgoing request open for 500ms, which is much greater than the 100ms timeout set in the server:

// Require our core node modules.
var http = require( "http" );


// Initiate a request to the server.
// --
// CAUTION: Look down below - we are not going to END the request (ie, indicate that we
// have written all of the data to the outgoing request) until some future point in time.
var request = http.request(
    {
        method: "GET",
        host: "localhost",
        port: 8080
    },
    function handleResponse( response ) {

        // When the response is readable, just keep reading.
        response.on(
            "readable",
            function handleReadableEvent() {

                var chunk = null;

                while ( ( chunk = response.read() ) !== null ) {
                    console.log( "Chunk Received: " + chunk.length + " bytes." );
                }

            }
        );

    }
);

// Listen for any errors on the outgoing request.
request.on(
    "error",
    function handleRequestError( error ) {
        console.log( "Request error:", error );
    }
);

// Try to exceed the timeout that the HTTP server is using. In our server setup, we are
// using a request/response timeout of 100ms of inactivity. As such, anything over 100ms
// should trigger some sort of timeout error.
setTimeout(
    function deferredEndingOfRequest() {
        request.end();
    },
    500 // Greater than 100ms.
);

Notice that the .end() call, on the outgoing request, is delayed by 500ms. If I start the server and make the request, I get the following terminal output (for both processes):

=======================
# SERVER TERMINAL TAB #
=======================

v2:http-response ben$ node server.js


========================
# REQUEST TERMINAL TAB #
========================

v2:http-response ben$ node slow-open.js
Request error: { [Error: socket hang up] code: 'ECONNRESET' }

As you can see, the server hung up on the incoming request because it didn't complete fast enough. But, notice that the server continues to run - this action did not emit an error and the server did not crash.
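Since the server terminal stays silent here, one way to make the hang-up visible would be to listen for the "timeout" event on the server. This is only a sketch, not something I ran as part of these tests; the handler name is hypothetical. As I read the documentation, once a "timeout" listener is bound, the server no longer destroys the idle socket for us, so the handler has to do it explicitly.

```javascript
// Require our core node modules.
var http = require( "http" );

// Create a bare-bones HTTP server (hypothetical - just enough to bind the event).
var httpServer = http.createServer(
    function handleHttpRequest( request, response ) {
        response.end( "OK" );
    }
);

// Use the same 100ms idle timeout as the file server.
httpServer.setTimeout( 100 );

// Log each idle timeout as it happens.
httpServer.on( "timeout", handleTimeout );

// I handle a socket that has been idle for too long (hypothetical handler name).
function handleTimeout( socket ) {
    console.log( "Socket timed out due to inactivity." );

    // ASSUMPTION: With our own listener bound, the timeout has to be handled
    // explicitly; destroying the socket mirrors the default hang-up behavior.
    socket.destroy();
}
```

With something like this in place, a log line in the server terminal would confirm that the client's "socket hang up" error was caused by the idle timeout.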

Next, I created a request that would end the outgoing request immediately, but would read from the response stream slowly and abort the request after the first readable chunk:

// Require our core node modules.
var http = require( "http" );


// Initiate a request to the server.
var request = http.request(
    {
        method: "GET",
        host: "localhost",
        port: 8080
    },
    function handleResponse( response ) {

        // When the response is readable, wait for 200ms and then start reading from the
        // response stream. Since 200ms is greater than the 100ms timeout defined by the
        // server, we may get some timeout interaction.
        // --
        // CAUTION: This probably has no impact because the response stream is likely
        // to be buffering data, from over the wire, regardless of whether or not we
        // start reading from it. This may become relevant with a request length of
        // significant size; but, for the small file transfer that we're using, I doubt
        // it makes a difference.
        response.on(
            "readable",
            function handleReadableEvent() {
                setTimeout( readFromResponse, 200 );
            }
        );

        // I read once from the pipe and then ABORT the request.
        function readFromResponse() {

            var chunk = null;

            while ( ( chunk = response.read() ) !== null ) {

                console.log( "Chunk Received: " + chunk.length + " bytes." );

                // While not huge, the response is sufficiently large to merit more than
                // one readable event. However, we're going to abort the request after
                // the first read to see if this triggers an error event in the server.
                request.abort();

            }

        }

    }
);

// Immediately indicate that the outgoing request has no more data to send. This way,
// we won't have to worry about the 100ms timeout setting for the incoming request (as
// defined by our server).
request.end();

Notice that I am waiting 200ms after the "readable" event to start reading from the response. When I execute this script, we get the following terminal output:

=======================
# SERVER TERMINAL TAB #
=======================

v2:http-response ben$ node server.js
>>>> Serving request <<<<
Slowly transforming 65536 bytes.
Request closed prematurely.
Slowly transforming 43261 bytes.


========================
# REQUEST TERMINAL TAB #
========================

v2:http-response ben$ node slow-close.js
Chunk Received: 65536 bytes.

We see a couple of interesting things here. First, nothing that took place crashed the server. So, there's still no obvious need to bind an error-event handler to the response stream. But, we can clearly see that the response was closed prematurely. It's unclear whether the response was closed due to the idle timeout or because the script explicitly aborted the request. It's also interesting that no error was raised from attempting to write the second chunk of file-data to the prematurely closed response.

Due to the ambiguity of the above results, in so much as why the response was closed, I decided to create a "control" request - one that would request the file, but do so in the most efficient way possible:

// Require our core node modules.
var http = require( "http" );
var fileSystem = require( "fs" );


// Initiate a request to the server.
// --
// NOTE: This is our **CONTROL** test. We are not performing any shenanigans and are
// trying to execute the request as fast as possible.
var request = http.request(
    {
        method: "GET",
        host: "localhost",
        port: 8080
    },
    function handleResponse( response ) {

        // Simply pipe the incoming JPEG response to a file on disk.
        response.pipe( fileSystem.createWriteStream( "./output.jpg" ) );

    }
);

// Indicate that the outgoing request has no more data to send.
request.end();

As you can see here, I'm making the request and then immediately streaming the response to a local file. When I execute this script, we get the following terminal output:

=======================
# SERVER TERMINAL TAB #
=======================

v2:http-response ben$ node server.js
>>>> Serving request <<<<
Slowly transforming 65536 bytes.
Request closed prematurely.
Slowly transforming 43261 bytes.


========================
# REQUEST TERMINAL TAB #
========================

v2:http-response ben$ node control.js

Very interesting! We're still getting a premature closing of the response. And, now that we've removed any slowness from the request context, it's obvious that the HTTP server closed the response due to the "SlowThrough" stream that is delaying each chunk by 5 seconds (though, it appears the timeout didn't kick in until after the first chunk of data was written to the response). Again, the file continues to stream through to the response stream, but the second half of the data never actually makes it across the wire. And, if I check the "output" file on disk, I can see that it is only half-rendered.

Still, however, nothing has crashed the server. But, now that I can see that the request is closing prematurely due to slowness on the server-side, I wanted to come up with a way to test slowness on the client-side. To do this, I simplified the HTTP file server, removed any delay, but switched over to using a large, 5MB Photoshop file instead of a small image JPG. The thinking here is that the previous file was small enough to be buffered inside the request even if I didn't read from it immediately.

Here is the large-file server:

// Require our core node modules.
var http = require( "http" );
var fileSystem = require( "fs" );


// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //


// Create an instance of our HTTP server.
var httpServer = http.createServer( handleHttpRequest );

// Attempt to set a request-timeout for incoming / outgoing requests.
// --
// NOTE: While the documentation on this property is not very robust, experimentation
// indicates that this value affects the idle time for both the incoming request and
// the outgoing response.
httpServer.setTimeout( 100 );

httpServer.listen( 8080 );


// I handle the incoming HTTP request.
function handleHttpRequest( request, response ) {

    console.log( ">>>> Serving request <<<<" );

    // Start our response.
    response.writeHead(
        200,
        "OK",
        {
            "Content-Type": "application/octet-stream"
        }
    );

    // Listen for the close and finish events on the response. Only one will fire,
    // depending on how the response is handled.
    response
        // "close" from the documentation:
        // --
        // >> Indicates that the underlying connection was terminated before
        // >> response.end() was called or able to flush.
        .on(
            "close",
            function handleCloseEvent() {
                console.log( "Request closed prematurely." );
            }
        )
        // "finish" from the documentation:
        // --
        // >> Emitted when the response has been sent. More specifically, this event is
        // >> emitted when the last segment of the response headers and body have been
        // >> handed off to the operating system for transmission over the network. It
        // >> does not imply that the client has received anything yet.... After this
        // >> event, no more events will be emitted on the response object.
        .on(
            "finish",
            function handleFinishEvent() {
                console.log( "Request finished successfully." );
            }
        )
    ;

    // Stream the 5.5 MB file to the response.
    fileSystem.createReadStream( "./large.psd" )
        .pipe( response )
    ;

}

Notice that I've removed the SlowThrough stream. The only feature of note is that I still have the 100ms timeout.

Then, I created a request that would delay reading from the stream for 200ms - 100ms greater than the server timeout:

// Require our core node modules.
var http = require( "http" );


// Initiate a request to the server.
var request = http.request(
    {
        method: "GET",
        host: "localhost",
        port: 8080
    },
    function handleResponse( response ) {

        // When the response is readable, wait for 200ms and then start reading from the
        // response stream. Since 200ms is greater than the 100ms timeout defined by the
        // server, we may get some timeout interaction.
        // --
        // NOTE: Because I am attempting to transfer a large (5 MB) file, the response
        // will very likely not be able to buffer it all until we start reading from the
        // stream. As such, in this case (as opposed to the slow-close.js example), the
        // 200ms delay here will actually have some fallout on the server-side.
        response.on(
            "readable",
            function handleReadableEvent() {
                setTimeout( readFromResponse, 200 );
            }
        );

        // Try to read all the available content from the response.
        function readFromResponse() {

            var chunk = null;

            while ( ( chunk = response.read() ) !== null ) {
                console.log( "Chunk Received: " + chunk.length + " bytes." );
            }

        }

    }
);

// Immediately indicate that the outgoing request has no more data to send. This way,
// we won't have to worry about the 100ms timeout setting for the incoming request (as
// defined by our server).
request.end();

When I run this request, I get the following terminal output:

=======================
# SERVER TERMINAL TAB #
=======================

v2:http-response ben$ node large-server.js
>>>> Serving request <<<<
Request closed prematurely.


========================
# REQUEST TERMINAL TAB #
========================

v2:http-response ben$ node large.js
Chunk Received: 65386 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 65527 bytes.
Chunk Received: 81667 bytes.

Ok, so still no server-crashing error-event on the response stream. But, this time, we see some really interesting stuff. The response closed prematurely; and, due to the current request / response configuration, we can confidently attribute that to the 200ms delay in the "readable" event which exceeded the 100ms request / response idle timeout in the server. But, even though the request closed prematurely, we can see that several hundred Kilobytes were still transferred across the stream before the back-pressure caused the response to become idle. Clearly, the request was able to buffer a good deal of file data before the response was closed.
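For anyone else who is fuzzy on "back-pressure", here is a rough sketch of the mechanism as I understand it: a writable stream's write() method returns false once its internal buffer is full, and the writer is expected to wait for the "drain" event before sending more. This is roughly what .pipe() does on our behalf. The writeChunk() helper below is a hypothetical name of my own, not part of Node.js.

```javascript
// I write a single chunk to the destination stream and invoke the callback once the
// destination is ready for more data (hypothetical helper for illustration).
function writeChunk( destination, chunk, onReady ) {

    // A false return value signals back-pressure: the destination's internal buffer
    // is full and we should hold off on writing anything else.
    var canContinue = destination.write( chunk );

    if ( canContinue ) {
        onReady();
        return;
    }

    // Wait for the buffer to be flushed before asking for the next chunk.
    destination.once( "drain", onReady );

}
```

If the client stops reading, the "drain" event never arrives, the writer stops writing, and the connection sits idle. That idleness is what the server's 100ms timeout then acts upon.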

Once I saw that a sufficiently large file transfer would get rid of possible buffering misdirection, I wanted to see what would happen if I prematurely aborted the request. So, using the same "large file" server, I set up a request that read once and then aborted:

// Require our core node modules.
var http = require( "http" );


// Initiate a request to the server.
var request = http.request(
    {
        method: "GET",
        host: "localhost",
        port: 8080
    },
    function handleResponse( response ) {

        // When the response is readable, read a bit and then abort.
        response.on(
            "readable",
            function readFromResponse() {

                var chunk = null;

                while ( ( chunk = response.read() ) !== null ) {

                    console.log( "Chunk Received: " + chunk.length + " bytes." );

                    // Kill the request prematurely.
                    request.abort();

                }

            }
        );

    }
);

// Immediately indicate that the outgoing request has no more data to send. This way,
// we won't have to worry about the 100ms timeout setting for the incoming request (as
// defined by our server).
request.end();

When we run this request, we get the following terminal output:

=======================
# SERVER TERMINAL TAB #
=======================

v2:http-response ben$ node large-server.js
>>>> Serving request <<<<
Request closed prematurely.


========================
# REQUEST TERMINAL TAB #
========================

v2:http-response ben$ node large-abort.js
Chunk Received: 65386 bytes.

The request.abort() killed the request and the server closed the response prematurely. But, still, no crashing of the server.

While this experimentation may not be an exhaustive look at all the things that can go wrong in network programming, the results here seem to indicate that an HTTP response stream will never emit an error event; or, at the very least, that such an error event will never crash the server. So, while you have to bind an "error" event handler to just about every other stream in Node.js, it would seem that you can safely assume the HTTP response stream will never error, no matter what happens. And, even if the response stream closes, you can continue to write to it / pipe to it without consequence.
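That said, "without consequence" doesn't mean "without waste": in the tests above, the file kept streaming after the response had already closed. Here is a minimal sketch of how the source stream could be stopped once the response closes. The pipeWithCleanup() helper is a hypothetical name of my own, and I haven't run it against the servers above.

```javascript
// I pipe the source stream into the response and stop reading from the source once
// the response closes (hypothetical helper for illustration).
function pipeWithCleanup( source, response ) {

    response.on(
        "close",
        function handleCloseEvent() {

            // Nobody is listening anymore - detach the source and release it so that
            // we don't keep reading data that will never make it across the wire.
            source.unpipe( response );
            source.destroy();

        }
    );

    return( source.pipe( response ) );

}
```

In the file server, this would replace the direct .pipe( response ) call; for example, pipeWithCleanup( fileSystem.createReadStream( "./large.psd" ), response ).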
