Having Fun With The SpeechSynthesis API In Angular 11.0.5

Happy New Year to all of you beautiful people! The other day while recording the Working Code podcast, my co-host Carol Hamilton mentioned a website called VoiceChanger.io, which provides a feature for synthesizing speech from text. Looking at the source of that page, it appears to use something called the SpeechSynthesis API, which uses your computer's (or device's) built-in speech synthesis functionality to generate sound. Seeing as this is the new year, I thought I would take a morning and have some fun experimenting with this SpeechSynthesis API in Angular 11.0.5.

Run this demo in my JavaScript Demos project on GitHub.

View this code in my JavaScript Demos project on GitHub.

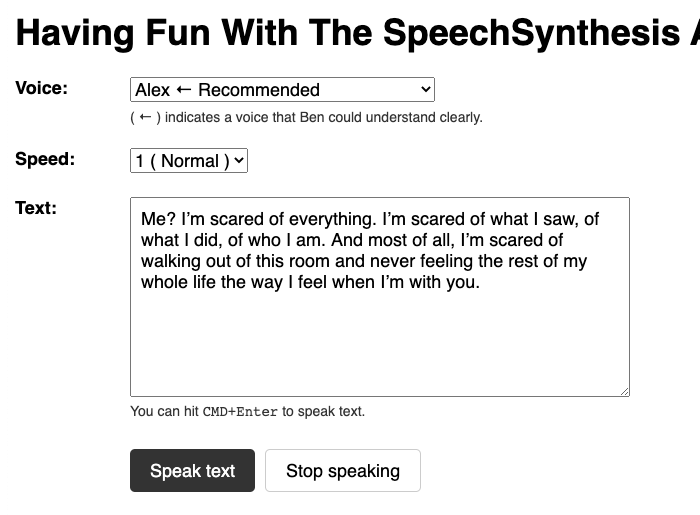

To get a sense of what this API can do, I just wanted to create a user interface (UI) that would allow me to select a voice, enter some arbitrary text, and then generate some sounds! This ended up being quite easy (not accounting for any unhappy paths in which a device doesn't support this API).

ASIDE: The default text in this demo is from Dirty Dancing (video clip), which is easily one of the best movies ever made. If you haven't seen it yet, it's a new year to get your movie education on!

To see this in action, you can either try the demo or watch the video.
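The demo deliberately ignores the unhappy path where a device doesn't support the API. If you did want to guard against it, here is a minimal sketch of one way to do it. The names `SpeechHost`, `isSpeechSynthesisSupported()`, and `trySpeak()` are hypothetical (they are not part of the demo), and the small structural types only model the members used here, so the sketch compiles with or without the DOM typings.

```typescript
// Minimal structural types (hypothetical) covering only what this sketch touches.
interface UtteranceLike {
  rate: number;
}

interface SynthLike {
  speak(utterance: UtteranceLike): void;
  cancel(): void;
}

interface SpeechHost {
  speechSynthesis?: SynthLike;
  SpeechSynthesisUtterance?: new (text: string) => UtteranceLike;
}

// True only when both the synthesizer and the utterance constructor exist.
function isSpeechSynthesisSupported(host: SpeechHost): host is Required<SpeechHost> {
  return (
    typeof host.speechSynthesis !== "undefined" &&
    typeof host.SpeechSynthesisUtterance === "function"
  );
}

// Speaks the text when the API is available; returns false (and warns) otherwise.
function trySpeak(host: SpeechHost, text: string, rate = 1): boolean {
  if (!isSpeechSynthesisSupported(host)) {
    console.warn("SpeechSynthesis API is not supported on this device.");
    return false;
  }
  const utterance = new host.SpeechSynthesisUtterance(text);
  utterance.rate = rate;
  // Like the demo's stop() method, cancel anything already queued before speaking.
  host.speechSynthesis.cancel();
  host.speechSynthesis.speak(utterance);
  return true;
}
```

In the browser you would pass the global `window` object as the host (casting it to `SpeechHost` if your typings complain); on a device without the API, the call degrades to a console warning instead of a runtime error.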

Here's the code-behind for this App component. It just collects the voices from the SpeechSynthesis API (which appear to be loaded asynchronously) and makes them available in the resultant form. Not all of the voices were intelligible to my ear; so, I've marked some of them as "recommended".

```typescript
// Import the core angular services.
import { Component } from "@angular/core";
import { OnInit } from "@angular/core";

// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //

interface RecommendedVoices {
	[key: string]: boolean;
}

@Component({
	selector: "app-root",
	styleUrls: [ "./app.component.less" ],
	templateUrl: "./app.component.html"
})
export class AppComponent implements OnInit {

	public sayCommand: string;
	public recommendedVoices: RecommendedVoices;
	public rates: number[];
	public selectedRate: number;
	public selectedVoice: SpeechSynthesisVoice | null;
	public text: string;
	public voices: SpeechSynthesisVoice[];

	// I initialize the app component.
	constructor() {

		this.voices = [];
		this.rates = [ .25, .5, .75, 1, 1.25, 1.5, 1.75, 2 ];
		this.selectedVoice = null;
		this.selectedRate = 1;
		// Dirty Dancing for the win!
		this.text = "Me? ... I'm scared of everything. I'm scared of what I saw, of what I did, of who I am. And most of all, I'm scared of walking out of this room and never feeling the rest of my whole life ... the way I feel when I'm with you.";
		this.sayCommand = "";

		// These are "recommended" insofar as these are the voices that I (Ben) could
		// understand most clearly.
		this.recommendedVoices = Object.create( null );
		this.recommendedVoices[ "Alex" ] = true;
		this.recommendedVoices[ "Alva" ] = true;
		this.recommendedVoices[ "Damayanti" ] = true;
		this.recommendedVoices[ "Daniel" ] = true;
		this.recommendedVoices[ "Fiona" ] = true;
		this.recommendedVoices[ "Fred" ] = true;
		this.recommendedVoices[ "Karen" ] = true;
		this.recommendedVoices[ "Mei-Jia" ] = true;
		this.recommendedVoices[ "Melina" ] = true;
		this.recommendedVoices[ "Moira" ] = true;
		this.recommendedVoices[ "Rishi" ] = true;
		this.recommendedVoices[ "Samantha" ] = true;
		this.recommendedVoices[ "Tessa" ] = true;
		this.recommendedVoices[ "Veena" ] = true;
		this.recommendedVoices[ "Victoria" ] = true;
		this.recommendedVoices[ "Yuri" ] = true;

	}

	// ---
	// PUBLIC METHODS.
	// ---

	// I demo the currently-selected voice.
	public demoSelectedVoice() : void {

		if ( ! this.selectedVoice ) {

			console.warn( "Expected a voice, but none was selected." );
			return;

		}

		var demoText = "Best wishes and warmest regards.";

		this.stop();
		this.synthesizeSpeechFromText( this.selectedVoice, this.selectedRate, demoText );

	}

	// I get called once after the inputs have been bound for the first time.
	public ngOnInit() : void {

		this.voices = speechSynthesis.getVoices();
		this.selectedVoice = ( this.voices[ 0 ] || null );
		this.updateSayCommand();

		// The voices aren't immediately available (or so it seems). As such, if no
		// voices came back, let's assume they haven't loaded yet and we need to wait for
		// the "voiceschanged" event to fire before we can access them.
		if ( ! this.voices.length ) {

			speechSynthesis.addEventListener(
				"voiceschanged",
				() => {

					this.voices = speechSynthesis.getVoices();
					this.selectedVoice = ( this.voices[ 0 ] || null );
					this.updateSayCommand();

				}
			);

		}

	}

	// I synthesize speech from the current text for the currently-selected voice.
	public speak() : void {

		if ( ! this.selectedVoice || ! this.text ) {

			return;

		}

		this.stop();
		this.synthesizeSpeechFromText( this.selectedVoice, this.selectedRate, this.text );

	}

	// I stop any current speech synthesis.
	public stop() : void {

		if ( speechSynthesis.speaking ) {

			speechSynthesis.cancel();

		}

	}

	// I update the "say" command that can be used to generate a sound file from the
	// current speech synthesis configuration.
	public updateSayCommand() : void {

		if ( ! this.selectedVoice || ! this.text ) {

			return;

		}

		// With the say command, the rate is the number of words-per-minute. As such, we
		// have to finagle the SpeechSynthesis rate into something roughly equivalent for
		// the terminal-based invocation.
		var sanitizedRate = Math.floor( 200 * this.selectedRate );
		// Flatten line breaks and escape only the characters that are still special
		// inside a double-quoted shell string (", \, $ and `). Escaping anything else
		// (such as the apostrophes in the text) would leave literal backslashes behind.
		var sanitizedText = this.text
			.replace( /[\r\n]/g, " " )
			.replace( /(["\\$`])/g, "\\$1" )
		;

		// The voice name is quoted as well, in case it contains spaces.
		this.sayCommand = `say --voice "${ this.selectedVoice.name }" --rate ${ sanitizedRate } --output-file=demo.aiff "${ sanitizedText }"`;

	}

	// ---
	// PRIVATE METHODS.
	// ---

	// I perform the low-level speech synthesis for the given voice, rate, and text.
	private synthesizeSpeechFromText(
		voice: SpeechSynthesisVoice,
		rate: number,
		text: string
		) : void {

		var utterance = new SpeechSynthesisUtterance( text );
		// Use the voice argument (rather than this.selectedVoice) so that the method
		// actually honors the voice it was given.
		utterance.voice = voice;
		utterance.rate = rate;

		speechSynthesis.speak( utterance );

	}

}
```
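The `ngOnInit()` method above handles the asynchronous voice list with a one-off `voiceschanged` listener. If you'd rather `await` the voices, a small Promise wrapper is one option. This is a sketch that is not part of the original demo: `loadVoices()`, `VoiceSource`, and the 3-second timeout are my own assumptions (the timeout is only a guard in case the event never fires on some platform).

```typescript
// Hypothetical minimal shapes, so the sketch doesn't depend on the DOM typings.
interface VoiceLike {
  name: string;
  lang: string;
}

interface VoiceSource {
  getVoices(): VoiceLike[];
  addEventListener(type: "voiceschanged", listener: () => void): void;
  removeEventListener(type: "voiceschanged", listener: () => void): void;
}

// Resolves with the voice list. If the list is empty at first, waits for the
// "voiceschanged" event (or the timeout, whichever comes first) and reads it again.
function loadVoices(source: VoiceSource, timeoutMs = 3000): Promise<VoiceLike[]> {
  const initial = source.getVoices();

  if (initial.length) {
    return Promise.resolve(initial);
  }

  return new Promise((resolve) => {
    const finish = () => {
      // Clean up both triggers so the listener doesn't linger after we resolve.
      clearTimeout(timer);
      source.removeEventListener("voiceschanged", finish);
      resolve(source.getVoices());
    };
    const timer = setTimeout(finish, timeoutMs);
    source.addEventListener("voiceschanged", finish);
  });
}
```

In the component, `ngOnInit()` could then call `loadVoices( speechSynthesis ).then( ... )` and assign the result, possibly with a cast depending on your DOM typings. The trade-off is that the listener is removed after the first event, whereas the original listener keeps updating the list if the browser fires `voiceschanged` again.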

I have no idea whether these voices are a standard set that will be available on other devices; but, these are the ones that are available on my Mac.
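Since the voice list varies by device, the hard-coded "recommended" names may not match anything on another machine. One hedge is to compute which recommended voices actually exist and fall back to the first available voice otherwise. The names below are copied from the component; `availableRecommendedVoices()` and `pickInitialVoice()` are hypothetical helpers, and the approach assumes a device reports voice names exactly as my Mac does.

```typescript
interface VoiceLike {
  name: string;
  lang: string;
}

// The voices I found easiest to understand on macOS (copied from the component).
const RECOMMENDED_NAMES = new Set([
  "Alex", "Alva", "Damayanti", "Daniel", "Fiona", "Fred", "Karen", "Mei-Jia",
  "Melina", "Moira", "Rishi", "Samantha", "Tessa", "Veena", "Victoria", "Yuri",
]);

// Returns the recommended voices that actually exist on this device, in device order.
function availableRecommendedVoices(voices: VoiceLike[]): VoiceLike[] {
  return voices.filter((voice) => RECOMMENDED_NAMES.has(voice.name));
}

// Prefers a recommended voice; otherwise falls back to whatever the device lists
// first, or null when the device reports no voices at all.
function pickInitialVoice(voices: VoiceLike[]): VoiceLike | null {
  return availableRecommendedVoices(voices)[0] ?? voices[0] ?? null;
}
```

Used in place of `this.voices[ 0 ]` in `ngOnInit()`, this would start the form on an intelligible voice when one is present, without breaking on devices where none of the names exist.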

ASIDE: You may have noticed that when you change one of the inputs, I'm generating a `say` command. On macOS, the `say` command is the speech synthesis binary that, I assume, is what is feeding the `SpeechSynthesis` API. In the terminal, you can invoke `say` with similar inputs; and, you can even generate an audio file.
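The `say` command built in `updateSayCommand()` wraps the text in double quotes, which means every character the shell still treats specially inside double quotes has to be escaped by hand. A different technique, swapped in here purely for illustration, is to wrap each argument in single quotes, inside which a POSIX shell performs no expansion at all; the only character that needs handling is the single quote itself. This also copes with voice names that contain spaces. `shellQuote()` and `buildSayCommand()` are hypothetical helpers, and the 200-words-per-minute baseline is the same rough assumption the component makes.

```typescript
// Wraps a value in single quotes for a POSIX shell. An embedded single quote is
// written as '\'' (close the quote, add an escaped quote, reopen the quote).
function shellQuote(value: string): string {
  return "'" + value.replace(/'/g, "'\\''") + "'";
}

// Hypothetical pure version of updateSayCommand(). Assumes, as the component does,
// that a SpeechSynthesis rate of 1 is roughly 200 words per minute for `say`.
function buildSayCommand(
  voiceName: string,
  rate: number,
  text: string,
  outputFile = "demo.aiff"
): string {
  const wordsPerMinute = Math.floor(200 * rate);
  // Collapse runs of line breaks into a single space so the command stays on one line.
  const flattenedText = text.replace(/[\r\n]+/g, " ");

  return [
    "say",
    "--voice", shellQuote(voiceName),
    "--rate", String(wordsPerMinute),
    `--output-file=${shellQuote(outputFile)}`,
    shellQuote(flattenedText),
  ].join(" ");
}
```

For example, `buildSayCommand( "Alex", 1.5, "I'm scared\nof everything." )` produces `say --voice 'Alex' --rate 300 --output-file='demo.aiff' 'I'\''m scared of everything.'`. Keeping the builder pure (no component state) also makes it easy to test outside of Angular.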

Here's the HTML template for this demo:

```html
<div class="form-field">
	<label for="voice-control" class="form-field__label">
		Voice:
	</label>
	<div class="form-field__content">
		<select
			id="voice-control"
			name="voice"
			[(ngModel)]="selectedVoice"
			(change)="demoSelectedVoice(); updateSayCommand()"
			class="form-field__control">
			<option value="">- Select a voice -</option>
			<option
				*ngFor="let voice of voices"
				[ngValue]="voice">
				{{ voice.name }}
				<ng-template [ngIf]="recommendedVoices[ voice.name ]">
					← Recommended
				</ng-template>
			</option>
		</select>
		<div class="form-field__subnote">
			( ← ) indicates a voice that Ben could understand clearly.
		</div>
	</div>
</div>

<div class="form-field">
	<label for="rate-control" class="form-field__label">
		Speed:
	</label>
	<div class="form-field__content">
		<select
			id="rate-control"
			name="rate"
			[(ngModel)]="selectedRate"
			(change)="demoSelectedVoice(); updateSayCommand()"
			class="form-field__control">
			<option
				*ngFor="let rate of rates"
				[ngValue]="rate">
				{{ rate }}
				<ng-template [ngIf]="( rate === 1 )">
					( Normal )
				</ng-template>
			</option>
		</select>
	</div>
</div>

<div class="form-field">
	<label for="text-control" class="form-field__label">
		Text:
	</label>
	<div class="form-field__content">
		<textarea
			id="text-control"
			name="text"
			[(ngModel)]="text"
			(input)="updateSayCommand()"
			(keydown.Meta.Enter)="speak()"
			class="form-field__control"
		></textarea>
		<div class="form-field__subnote">
			You can hit <code>CMD+Enter</code> to speak text.
		</div>
	</div>
</div>

<div class="form-actions">
	<button
		type="button"
		(click)="speak()"
		class="form-actions__button">
		Speak text
	</button>
	<button
		type="button"
		(click)="stop()"
		class="form-actions__button form-actions__button--secondary">
		Stop speaking
	</button>
</div>

<div *ngIf="sayCommand" class="say">
	<h3 class="say__title">
		On MacOS? Want to generate a sound file?
	</h3>
	<p class="say__description">
		In the terminal, you can use the <code>say</code> binary to generate an audio
		file (.aiff) using the following command:
	</p>
	<input
		#sayRef
		[value]="sayCommand"
		(click)="sayRef.select()"
		(focus)="sayRef.select()"
		class="say__code"
	/>
</div>
```

There's not a whole lot going on in this demo, which is why it's so cool that I can actually generate speech from such a simple setup! I won't go into any more detail about the SpeechSynthesis API because, frankly, I don't know any more than what I've shared here. This was just a fun exploration and a mental palate cleanser for the New Year!

Want to use code from this post? Check out the license.

Reader Comments

@All,

Another way that I use the speech synthesis command (`say`) is to help me stay focused when I have long-running background tasks in the terminal: www.bennadel.com/blog/4048-pro-tip-using-the-say-voice-synthesis-command-after-a-long-running-task.htm

I can use the `&&` terminal operator to run a `say` command after a compilation step to let me know that the compilation is done.

Good morning there. Thanks for your blog, @Ben Nadel. I'm trying to use text-to-speech with Ionic. How can I configure it? Which plugin should I use? I hope you will answer me. Thank you in advance.

@Nana,

Unfortunately, I don't have any experience with Ionic. But, my understanding is that Ionic is just a UI framework for the web; so, I don't think it would impose any additional constraints. As such, I think you can just use the browser's native speech synthesis API (as I have done in this blog post).

I suppose if you were running it inside of Electron or something, maybe the version of Chrome that ships with it doesn't support the speech synthesis API; but, I don't have any experience with Electron either.

@Ben,

Thank you very much for your answer. In the end, I ran the code you posted and it worked without issue. It turned out to be just a TypeScript matter; before, I thought I had to install plugins to make it work.

And I'm not running it in Electron, just in the browser; I don't use Electron at all.

@Nana,

Awesome! Glad you got it working. It's a really fun little API to play with.

Thanks, Ben, for sharing. This works great on Chrome (Mac) and I'm able to change the voice to French. However, on iOS devices, the voices are completely different and I can't find the French ones. Any clue?

@Xavier,

Unfortunately, I don't know. From what I found with some Googling, iOS devices seem to be inconsistent. The voices must be system-specific and, for whatever reason, Apple has different ones on the Mac vs. the iPhone. Sorry I don't have any better insight.

@Ben,

Thank you for the reply.

I did find the voices on iOS, but the array is completely different: the same voice is at index 4 on the Mac and at index 89 on iOS! iOS has many more voices, by the way.

I will have to detect the device to adjust the voice accordingly.

@Xavier,

Ahh, good to know 💪 Thanks for testing on the phones for us!
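Xavier's observation (the same voice sitting at a different array index on macOS and iOS) suggests selecting voices by language and name rather than by position. Here is a minimal sketch, assuming the `lang` values are BCP 47 tags such as "fr-FR". `findVoiceForLanguage()` is a hypothetical helper, and the underscore normalization is purely defensive, in case a platform reports something like "fr_FR".

```typescript
interface VoiceLike {
  name: string;
  lang: string;
}

// Picks a voice by language tag (e.g. "fr" or "fr-FR") instead of by array index.
// If a preferred name is given and exists in that language, it wins; otherwise the
// first voice in the language is returned, or null when there is none.
function findVoiceForLanguage(
  voices: VoiceLike[],
  lang: string,
  preferredName?: string
): VoiceLike | null {
  const wanted = lang.toLowerCase();
  const matches = voices.filter((voice) => {
    const voiceLang = voice.lang.toLowerCase().replace(/_/g, "-");
    // Match the exact tag, or any more specific region of it ("fr" matches "fr-CA").
    return voiceLang === wanted || voiceLang.startsWith(wanted + "-");
  });

  if (preferredName) {
    const named = matches.find((voice) => voice.name === preferredName);
    if (named) {
      return named;
    }
  }

  return matches[0] ?? null;
}
```

Because the lookup depends only on each voice's own `lang` and `name`, it gives the same answer regardless of how many voices a device lists or in what order, so device detection becomes unnecessary for this particular problem.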