I Wish JavaScript Had A Way To Map And Filter Arrays In A Single Operation

For years, I used jQuery to build my web applications. And, no matter what anyone says, jQuery was and is awesome. One of the features that I miss most (now that I use other frameworks) is the intelligence that jQuery built into its mapping and iteration functions. Breaking out of the .each() or flattening results of the .map() call are just two ways in which jQuery built "common use case" patterns into its library. I miss this in so-called "vanilla" JavaScript. One of the most common use-cases that I wish JavaScript handled was the ability to map and filter an Array in a single operation.

Obviously, JavaScript presents both a .map() method and a .filter() method on the Array instance (via the Array prototype); so, it's easy to chain a .filter() call after a .map() call. But, filtering the results of a mapping operation seems like such a common use-case that it would be nice to have the filtering aspects of the use-case implicitly enacted behind the scenes.
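For reference, the chained approach looks something like the following minimal sketch. The sample `letters` array and the variable names are assumptions made for illustration only:

```javascript
// Illustrative sample data (an assumption, chosen only for this sketch).
const letters = [ "a", "b", "c", "d", "e" ];

// Chain the two built-in methods: first map every value, then filter out the
// mapped results that should not be kept.
const upperConsonants = letters
	.map( ( value ) => value.toUpperCase() )
	.filter( ( value ) => ! "AEIOU".includes( value ) )
;

console.log( upperConsonants ); // [ 'B', 'C', 'D' ]
```

This works, but the mapping and the filtering logic live in two separate callbacks.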

People often complain about the fact that JavaScript has both a "null" and an "undefined" value. But, I think we can use this dichotomy to our advantage. For example, we could say that if a mapping operation returned an "undefined" value, then the value should be omitted from the final result:

    // I map the current collection onto another collection using the given operator.
    // Undefined products of the operation will automatically be removed from the final
    // results (filter value 'undefined' can be overridden).
    Array.prototype.mapAndFilter = function(
        operator,
        valueToFilter = undefined,
        context = null
        ) {

        var results = this
            .map( operator, context )
            .filter(
                ( value ) => {

                    return( value !== valueToFilter );

                }
            )
        ;

        return( results );

    };

    // ----------------------------------------------------------------------------------- //
    // ----------------------------------------------------------------------------------- //

    var values = [ "a", "b", "c", "d", "e", "f", "g" ];

    // Map values onto upper-case version, but exclude the vowels.
    var result = values.mapAndFilter(
        ( value ) => {

            // Exclude vowels. Skipping the explicit return for vowels will implicitly
            // return "undefined". This will, in turn, cause this iteration result to be
            // omitted from the resultant collection.
            if ( ! "aeiouy".includes( value ) ) {

                return( value.toUpperCase() );

            }

        }
    );

    console.log( "VALUES:", values );
    console.log( "RESULT:", result );

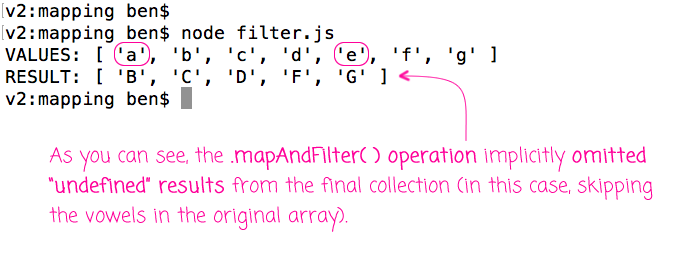

Here, I am augmenting the Array prototype to include a .mapAndFilter() method. Under the hood, the method does exactly that - it maps and then it filters. Only, the filtering is done automatically based on "undefined" results of the mapping operation. To test this, I'm mapping an array of lower-case values onto an array of upper-case values with the vowels excluded. And, when we run this through node.js, we get the following terminal output:

As you can see, when our mapping function iterated over a vowel, it implicitly returned undefined by skipping the return statement. The .mapAndFilter() operation then omitted that undefined result from the final collection.

In JavaScript, we can accomplish this in any number of ways, from augmenting the core Prototype chain (as I did) to implementing custom libraries. So, this post isn't about the lack of opportunity. Rather, it's just me missing some of the intelligence that jQuery brought to the table. Perhaps I am just being nostalgic today.


Reader Comments

I didn't read (yet!), but is this what you want? http://2ality.com/2017/04/flatmap.html

@Ray,

Yeah, Axel's article is very interesting. You could definitely implement the same thing with flatMap() by essentially returning the empty array [] when you want to filter OUT the result. That's actually quite clever. Looks like they are proposing this for the future. I hope it lands.
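For reference, flatMap() has since been standardized (ES2019) as Array.prototype.flatMap(). A minimal sketch of the empty-array trick described above, assuming a runtime that supports it and reusing the sample values from the post:

```javascript
const values = [ "a", "b", "c", "d", "e", "f", "g" ];

const result = values.flatMap(
	( value ) => {

		// Returning an empty array contributes nothing to the flattened result,
		// which effectively filters this item OUT.
		if ( "aeiouy".includes( value ) ) {

			return( [] );

		}

		// Returning a one-item array contributes exactly one mapped value.
		return( [ value.toUpperCase() ] );

	}
);

console.log( result ); // [ 'B', 'C', 'D', 'F', 'G' ]
```

Note that flatMap() only flattens one level, so a mapped value that is itself an array would need to be wrapped in an outer array to survive intact.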

@All,

On Twitter, Pete Schuster made a good point - if you chain .map() and .filter(), you have to iterate over the collection twice. If you use .reduce(), you could do the mapping and the filtering in one iteration. Of course, in my approach, since the logic is encapsulated behind a .mapAndFilter() method, the internal implementation can be swapped out easily.
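As a rough sketch of that idea, a single-pass version built on .reduce() might look like the following. The standalone function form (rather than a prototype method) and its parameter order are assumptions made for illustration:

```javascript
// Hypothetical standalone variant of mapAndFilter() that uses one .reduce() pass
// instead of chaining .map() and .filter().
function mapAndFilter( collection, operator, valueToFilter = undefined, context = null ) {

	return collection.reduce(
		( results, value, index ) => {

			var mappedValue = operator.call( context, value, index, collection );

			// Only keep the mapped value if it is not the value being filtered out.
			if ( mappedValue !== valueToFilter ) {

				results.push( mappedValue );

			}

			return( results );

		},
		[]
	);

}

var values = [ "a", "b", "c", "d", "e", "f", "g" ];

var result = mapAndFilter(
	values,
	( value ) => {

		if ( ! "aeiouy".includes( value ) ) {

			return( value.toUpperCase() );

		}

	}
);

console.log( result ); // [ 'B', 'C', 'D', 'F', 'G' ]
```

The calling code stays the same shape as in the post; only the internals change from two iterations to one.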

I've elaborated here: https://www.webpackbin.com/bins/-Klxe4iaXptF4a_WpFTq

Reduce is probably what you want to use, but there are also libraries out there, like lodash, that will let you combine the operations so that you only iterate once.

@Ryan,

Thanks for fleshing out your thoughts - much appreciated. I definitely like the idea of using .reduce(), as it allows for a single pass over the collection and keeps all the logic "explicit." But, I still wonder if there is a common-enough use-case for filtering to be applied based on the return value. Though, such a thing can always be done in an application-specific util/methods.

yeah, reduce() is built for this.

Hey Ben, a bit late to the party, just leaving this for posterity. As already mentioned the pattern you're describing is well covered with reduce. People unfortunately don't use that function as much as they use map and filter and tutorials introducing it usually showcase an accumulator that performs some mundane addition, so that's how people think of it. But reduce is actually more powerful than map or filter. The latter two are not necessary since you can derive their respective patterns just with the former. An accumulator can be anything. In this case, set it to an array and you have what you want.

oops forgot the parseInt in mapFunction, but whatever. you get the idea :-D

@Michael, @Eric,

Agreed, .reduce() is pretty bad-ass. I should think about it more often. I only just recently realized that you can omit the initial value (the second argument) and the .reduce() function will automatically start with the first value in the collection as the initial accumulator. I don't have a lot of use-cases for that (outside of numeric operations); but, it just goes to show how little hands-on use I have with it.
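To make that behavior concrete, here is a small sketch. The sample numbers and variable names are assumptions made for illustration:

```javascript
var numbers = [ 1, 2, 3, 4 ];
var visitedIndexes = [];

// With no initial value, the first item (1) becomes the initial accumulator and
// iteration starts at the second item (index 1).
var sum = numbers.reduce(
	( accumulator, value, index ) => {

		visitedIndexes.push( index );

		return( accumulator + value );

	}
);

console.log( sum ); // 10
console.log( visitedIndexes ); // [ 1, 2, 3 ]

// Caveat: an empty array with no initial value has nothing to start from, so
// .reduce() throws a TypeError.
var threwTypeError = false;

try {

	[].reduce( ( accumulator, value ) => accumulator + value );

} catch ( error ) {

	threwTypeError = ( error instanceof TypeError );

}

console.log( threwTypeError ); // true
```

That empty-array caveat is one reason to pass an explicit initial value whenever the collection might be empty.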