Hello World: Concurrency In Node.js Using The Cluster Module

Lately, I've been feeling very blocked with some software architecture matters, so I've been re-reading Clean Code: A Handbook Of Agile Software Craftsmanship by [Uncle Bob] Robert C. Martin. One of the themes that keeps coming up in the book is that things that change for different reasons should be separated from each other. For example, in the chapter on Concurrency, Uncle Bob recommends:

The [Single Responsibility Principle] states that a given method/class/component should have a single reason to change. Concurrency design is complex enough to be a reason to change in it's own right and therefor deserves to be separated from the rest of the code. Unfortunately, it is all too common for concurrency implementation details to be embedded directly into other production code. Here are a few things to consider:

* Concurrency-related code has its own life cycle of development, change, and tuning.

* Concurrency-related code has its own challenges, which are different from and often more difficult than non-concurrency related code.

* The number of ways in which miswritten concurrency-based code can fail makes it challenging enough without the added burden of surrounding application code.

Recommendation: Keep your concurrency-related code separate from other code.

This got me thinking about Node.js' Cluster module. I've never actually used the Cluster module before; mostly I just help maintain existing Node.js code rather than write it from scratch. But, the other day, Kris Siegel brought it up on Twitter. And, being so mentally blocked on other matters, I thought it would be a be fun distraction to implement a Hello World app using Cluster.

When you execute a Node.js process, it runs in a single thread. This means that it only has access to a single core on the host machine. So, in order to take advantage of the multi-core power of modern machines, we actually need to run multiple Node.js processes. There are ways to do this outside of Node.js itself; but, Node.js provides a native way to accomplish this: the Cluster module.

When you use the Cluster module, the entry-point of the application gets executed multiple times: once for the Master process and once for each additional Worker process that is forked from the Master (Cluster uses child_process.fork() under the hood). While each Worker runs in its own isolated V8 process, the beauty of the Cluster module is that each Worker shares ports with Master. As such, each Worker can actually listen on the same port as the Master, which will [attempt to] evenly distribute requests to each Worker.

Now, going back to what Uncle Bob said above about isolating concurrency concerns, I don't want to define both my clustering (ie, concurrency) logic and my server logic in the same place. As such, I'm breaking this experiment up into two different files:

- cluster.js - This handles the Master / Working forking and concurrency logic.

- server.js - This handles the single-threaded Server logic.

Of course, we still need to work within the constraints of the Cluster module, which means that we do have to consume the Server logic from within the Cluster logic. Luckily, in Node.js, that's as easy as invoking require(). In the following Cluster code, notice that my ELSE branch - for Worker logic - does nothing but consume the server module:

// Require the core node modules.

var chalk = require( "chalk" );

var cluster = require( "cluster" );

var os = require( "os" );

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

// The cluster module in Node.js works by executing the application entry-point

// multiple and sharing the master ports with the worker ports. As such, this code

// needs to distinguish between the Master process and the forked Worker process(es).

if ( cluster.isMaster ) {

console.log( chalk.red( "[Cluster]" ), "Master process is now running.", process.pid );

// Since each Node.js process executes in a single thread, we want to create

// Workers based on the number of Cores available on the operating system. This

// way, we don't get Workers competing with each other for resources.

for ( var i = 0, coreCount = os.cpus().length ; i < coreCount ; i++ ) {

var worker = cluster.fork();

}

// When one of the Workers dies, the cluster will emit an "exit" event, which

// we can use to then spawn new Worker processes.

cluster.on(

"exit",

function handleExit( worker, code, signal ) {

console.log( chalk.yellow( "[Cluster]" ), "Worker has died.", worker.process.pid );

console.log( chalk.yellow( "[Cluster]" ), "Death was suicide:", worker.exitedAfterDisconnect );

// If a Worker was terminated accidentally (such as by an uncaught

// exception), then we can try to restart it.

if ( ! worker.exitedAfterDisconnect ) {

var worker = cluster.fork();

// CAUTION: If the Worker dies immediately, perhaps due to a bug in the

// code, you can run [from what I have READ] into rapid CPU consumption

// as Master continually tries to create new Workers.

}

}

);

} else {

// Any time we deal with concurrency, we want to separate out the concurrency code

// from the rest of the code. As such, we don't want to actually define out Worker

// logic here. Instead, we want to require it from a different module. This has the

// happy side-effect of allowing us to run the application in Cluster mode or in

// stand-alone mode by using different entry points (cluster.js vs. server.js).

require( "./server" );

console.log( chalk.red( "[Worker]" ), "Worker has started.", process.pid );

}

This code offers little more than the actual Node.js documentation, other than the fact that the Server logic is isolated from the Cluster logic. But, as you can see, we're forking as many Workers as there are cores in the host operating system. This way, concurrent requests to the application should, theoretically, be handled by different processing resources.

With this setup, our Server module - the actual application logic - doesn't need to know anything about our use of Cluster. Within the Server, we just setup an HTTP server the way we would normally. It just so happens that this module is sharing Ports with the Master process:

// Require the core node modules.

var http = require( "http" );

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

// Here, our Worker is running in a completely isolated V8 process - it doesn't share

// memory with the Cluster or with other Workers. The one notable exception is that it

// is allowed to the share PORTS with the Cluster. As such, each Worker will share

// port 8000 with the Cluster. Or, if this module was run as the application's entry

// point, it will just use port 8000 the same way it would without Clustering.

http

.createServer(

function handleRequest( request, response ) {

response.writeHead(

200,

{

"Content-Type": "text/html"

}

);

response.write( "Hello world from process " + process.pid + "." );

// In order to actually get the Cluster to distribute requests via the

// default Round Robin algorithm - FOR THE DEMO - I have to hold the

// request open so that I can generate concurrent requests to the Cluster.

setTimeout( response.end.bind( response ), 300 );

}

)

.listen( 8000 )

;

As you can see, there's nothing in this module that relates to the Clustering functionality at all (except for the setTimeout() used by the demo). Not only does this isolation maintain the separation of concerns, it has the happy side-effect of allowing me to execute this application with clustering:

node ./cluster.js

... or, without clustering, as a stand-alone process:

node ./server.js

This can make debugging much easier in a local environment, where tapping into a clustered set of processes is somewhat more complicated (though my experience with monitoring Node.js applications is limited).

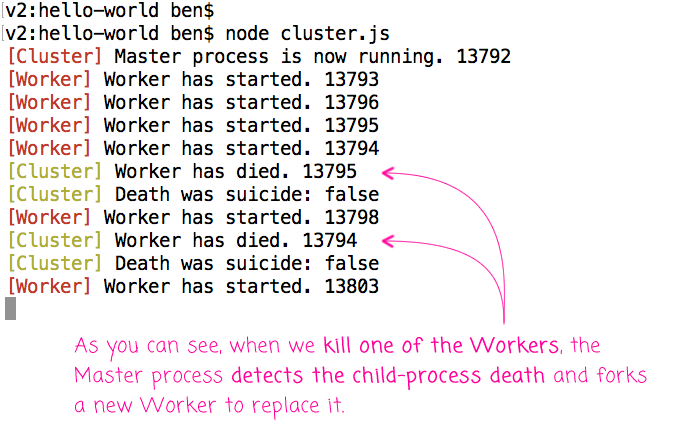

That said, if we run this application, using cluster.js as the entry point, we can see the Master and the Worker processes spin up in the terminal. And, if we go to force-quite a Worker process, we can see it get re-forked:

I've known about the Cluster module for a long time. But, in the early days, it wasn't a "Stable" module, so I never took the time to play around with it. Now that it's "Stable", it seems pretty cool. Of course, using Cluster in production would require more logic than what I have outlined here; but, generally speaking, Cluster seems to provide a lot of power without a lot of effort.

Want to use code from this post? Check out the license.

Reader Comments

I think another nice feature of this separation is that it makes it pretty easy switch between in-app clustering, and external clustering (using Kubernetes) or something similar to run a lot of single-threaded server processes), depending on how your deployment strategies evolve.

I like find it particularly interesting as a first step to scalability with docker:

You can start by using node clusters inside a single container, before you have to start clustering containers themselves via swarm or other orchestration tools.

Quick and easy way of squeezing some extra resources out of your low-key docker-based apps.

@Jesse,

Yes, very true. Or even moving the clustering to nginx (connecting to different ports) or something like pm2 - though to be transparent, I've never tried clustering with either of those technologies.

That said, I wonder if there are certain efficiencies to keeping the clustering logic closer to the code (as opposed to spinning up single-core servers)? The whole idea of letting a Kubernetes pod crash simply because one node process on it did something bad seems heavy-handed - I do like the idea of recovering the Worker thread without having to spawn a new pod (which I just have to assume is more expensive, time wise).

I'm sure someone has an article somewhere on the pros/cons of clustering at the different levels. I'll see if I can find a good one.

@Patrick,

And, if I understand what you are saying, it sounds like you can do it at both levels. Meaning, start off with clustering the cores in a single machine. Then, add pod clustering on top of that (when / if needed). I am not sure that one set of clustering has to replace the other - it seems that they should be able to work in conjunction with each other.

Hi, nice explanation!

Why do you even declare the `var worker`?

On the 1st time, it's in a `for loop` which will run over it agin and again and on the 2nd time, you run over an argument.

Thanks.

@Omer,

Good question. I have the "var" there for self-documentation of the code. If I just had a call to:

cluster.fork()

... then it wouldn't be obvious that the .fork() method returns a value (unless, of course, you have a strong understanding of the way the Cluster module works). By leaving in the "var", even though I don't use, it's an indicator that the .fork() method returns a value that you might want to know about.

But, certainly, removing it would not affect the code at all.

Great article. Very nicely sums up Node.js clustering. It's something people should be using for every Node.js server.

@Jake,

Thank you, good sir :)

@Ben,

PM2 allow you to launch your application with the nodejs cluster without having to add the whole logic. Its pretty simple, you just need to start your application with : `pm2 start app.js -i 2` where 2 is the number of worker.

For those who are reading this and are interested : http://pm2.keymetrics.io/docs/usage/cluster-mode/#usage

Late disclamer : i'm a pm2 maintainer :)

@Vmarchaud,

One of the things that is appealing to me about having the processes being managed outside of Node is that it seems -- from what I've read -- that you have more control over deploying new code. And, rather than stopping / starting the entire cluster, you can basically "roll in" new changes without down time. I know now every process manager has that built-in, but it looks like it is available on pm2:

http://strong-pm.io/compare/

Another thing that had not occurred to me is that when the system itself crashes, you need something to restart the process manager which will, in turn, restart the node process. Looks like pm2 also has that.

As an alternative to Cluster to resolving the issue of Node.js concurrency, you might want to take a look at QEWD. See

https://robtweed.wordpress.com/2017/04/18/having-your-node-js-cake-and-eating-it-too/

@Rob,

That sounds fairly interesting. I don't fully understand everything in the post, but it sounds interesting.

On a related note, I am having a very hard time trying to wrap my head around AWS Lambda. I totally get the idea of wanting to make some method / process scalable. But, I can't yet see how it call comes together in the context of a cohesive application. It seems like you'd have to have a bunch of message queues all coordinating various Lambda routines; but, I am sure I am missing something obvious as people seem to love Lambda.

@Ben,

Yes, I'm not sure how Lambda is used to construct complete applications either.

For more on QEWD working in practice, you might be interested in this:

https://github.com/robtweed/qewd-conduit

@Rob,

Thanks :)

@Vmarchaud,

Hey, any idea how is concurrency handled by PM2 in cluster mode. Also, can I add a process in runtime in noncluster mode.