Using ES6 Generators And Yield To Implement Asynchronous Workflows In JavaScript

CAUTION: This post is basically a "note to self" exploration.

One of the main reasons that I wanted to read Exploring ES6 by Dr. Axel Rauschmayer was that I needed to learn more about ES6 Generators. At work, some of the engineers have been using ES6 Generators to make asynchronous code look and feel like synchronous code. This is super awesome; but, at the same time, it is a total mystery to my primordial ES5 brain. What I didn't realize, when reading that kind of code, was that the most important part of the code was completely hidden away. This post is an attempt to look at that "hidden" code as a means to gain a more holistic understanding of how ES6 generators can be used to manage asynchronous workflows.

On their own, ES6 generators aren't necessarily "asynchronous" in nature. They are just iterable. They are like little state machines that iterate over yield-delimited portions of the generator function body. This iteration can be performed synchronously or asynchronously, depending on the calling code; the generator doesn't care one way or the other.

For example, here's a generator function that is being consumed synchronously:

```javascript
function* poem() {

    yield( "Roses are red" );
    yield( "Violets are blue" );
    yield( "I'm a schizophrenic" );
    yield( "And so am I" );

}

// By invoking the generator function, we are given a generator object, which we can
// use to iterate through the yield-delimited portions of the generator function body.
var iterator = poem();

// Invoke the first two iterations manually.
console.log( "== Start of Poem ==" );
console.log( iterator.next().value );
console.log( iterator.next().value );

// Invoke the next iterations implicitly with a for-of loop. Since the generator object
// remembers where it paused, the loop picks up at the third yield.
// --
// NOTE: When we iterate with a for-of loop, we don't have to call `.value`. This is
// because the for-of loop is both calling .next() and binding the `.value` property to
// our iteration variable implicitly.
for ( var line of iterator ) {

    console.log( line );

}

console.log( "== End of Poem ==" );
```

As you can see, we invoke the generator function to produce the generator object. We then synchronously iterate over the generator object both explicitly with calls to .next() and implicitly with a for-of loop. And, when we run this code in the terminal, we get the following output:

```
== Start of Poem ==
Roses are red
Violets are blue
I'm a schizophrenic
And so am I
== End of Poem ==
```

Notice that all of the console-logging appears in the terminal in the same order in which it was defined in the code. This is because all of this code executed synchronously; there's nothing implicitly asynchronous about generators.
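To make that ordering easier to see, here's a small sketch of my own (not part of the original demo) that records when the generator body runs relative to the calling code. The `countdown()` generator and the `trace` array are made-up names for illustration. The `while` loop is roughly what the for-of loop does for us: call `.next()` until the result's `done` flag is `true`.

```javascript
// Illustrative only: `trace` records the exact order of execution.
var trace = [];

function* countdown() {

    trace.push( "body: start" );
    yield( 2 );

    trace.push( "body: after first yield" );
    yield( 1 );

    trace.push( "body: end" );

}

// Calling the generator function does NOT run any of its body yet.
var iterator = countdown();
trace.push( "caller: got iterator" );

// Roughly what a for-of loop does: keep calling .next() until done is true.
var result = iterator.next();

while ( ! result.done ) {

    trace.push( "caller: received " + result.value );
    result = iterator.next();

}

console.log( trace.join( "\n" ) );
```

Nothing in the generator body runs until the first `.next()` call, and each call runs the body only as far as the next `yield`, all on the same synchronous call stack.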

If a generator function yields data structures - like Promises - that are asynchronous in nature, the generator is still synchronous; but, we definitely have to be more conscious about how we consume the yielded data. To demonstrate, let's take the poem generator and wrap each line in a Promise:

```javascript
function* poem() {

    yield( Promise.resolve( "Roses are red" ) );
    yield( Promise.resolve( "Violets are blue" ) );
    yield( Promise.resolve( "I'm a schizophrenic" ) );
    yield( Promise.resolve( "And so am I" ) );

}

// By invoking the generator function, we are given a generator object, which we can
// use to iterate through the yield-delimited portions of the generator function body.
var iterator = poem();

console.log( "== Start of Poem ==" );

// In this version of the code, the generator function is yielding data that is
// asynchronous in nature (Promises). The generator itself is still synchronous; but, we
// now have to be more conscious of the type of data that it is yielding. In this demo,
// since we're dealing with promises, we have to wait until all the promises have
// resolved before we can output the poem.
Promise
    .all( [ ...iterator ] ) // Convert iterator to an array of yielded promises.
    .then(
        function handleResolve( lines ) {

            for ( var line of lines ) {

                console.log( line );

            }

        }
    )
;

console.log( "== End of Poem ==" );
```

Here, we're still iterating over the generator in a synchronous manner, using the spread-operator to convert it to an array of Promises. But, we understand that the yielded data is asynchronous in nature; so, we wait for all of the lines of poetry to resolve before outputting the result. And, when we run the above code, we get the following terminal output:

```
== Start of Poem ==
== End of Poem ==
Roses are red
Violets are blue
I'm a schizophrenic
And so am I
```

As you can see, the console-logging appears in a different order in the terminal because the consuming code had to wait for the promises to resolve asynchronously before all of the logging could be completed.

In the above code, we're allowing each yielded promise to resolve in parallel. And already, the code is a good deal more complex than our original version. If we wanted the promises to resolve in serial - meaning one after another - that's where things get especially complicated.
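For comparison, here's one way to consume the same promise-yielding poem in serial: ask the generator for a promise, wait for it to resolve, and only then ask for the next one. This is a sketch under my own assumptions. `readInSerial()` is a hypothetical helper, and since this generator ignores the values passed into `.next()`, it only chains the promises rather than feeding resolved values back in.

```javascript
function* poem() {

    yield( Promise.resolve( "Roses are red" ) );
    yield( Promise.resolve( "Violets are blue" ) );
    yield( Promise.resolve( "I'm a schizophrenic" ) );
    yield( Promise.resolve( "And so am I" ) );

}

// Hypothetical helper: pulls one promise at a time from the iterator and only asks
// for the next one after the current one has resolved. Returns a promise of all lines.
function readInSerial( iterator, lines ) {

    var result = iterator.next();

    if ( result.done ) {

        return( Promise.resolve( lines ) );

    }

    return Promise.resolve( result.value ).then(
        function handleResolve( line ) {

            lines.push( line );

            // Recurse to request the next yielded promise.
            return( readInSerial( iterator, lines ) );

        }
    );

}

var linesPromise = readInSerial( poem(), [] );

linesPromise.then(
    function handleLines( lines ) {

        lines.forEach( function( line ) {

            console.log( line );

        });

    }
);
```

Because the generator body only runs when `.next()` is called, each `Promise.resolve()` inside `poem()` isn't even created until the previous line has resolved.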

In order to take a generator object that iterates over asynchronous data and have each step run in serial, we have to examine each yielded value, wait for it to resolve (in the case of Promises), and then take the resolved-value and pass it back into the next step of the generator's iteration.

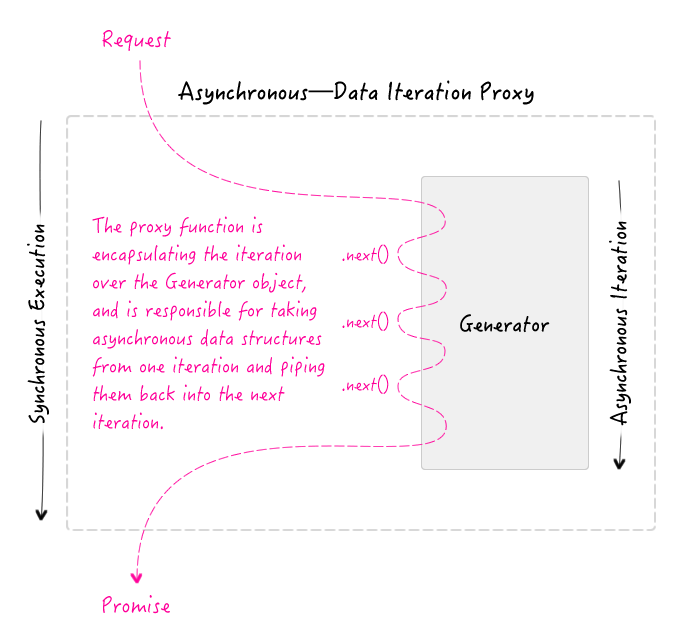

Here, we have some proxy function that synchronously receives a request and returns a promise of a final result. Internally, it then iterates over a generator that is yielding asynchronous data structures. In most of the ES6 Generator code that I've read, this asynchronous control-flow is completely hidden behind methods like Bluebird.coroutine(). This reduces the complexity of consumption; but, when you're trying to learn about how this all works, this encapsulation makes it much more difficult to understand.

In order to bring transparency to the asynchronous flow of control, and to try and wrap my head around it, I wanted to put together a demo that includes a simplified version of one of these "coroutine" proxies. In the following code, we have a generator function that yields promises:

```javascript
// I am a generator. Such steps! Much iteration! So confusion!
function* getUserFriendsGenerator( id ) {

    var user = yield( getUser( id ) );
    var friends = yield( getFriends( id ) );

    return( [ user, friends ] );

}
```

We're going to proxy the iteration through this function body such that we can return a single promise that represents the serial asynchronous execution of this code. But, before we do, it's important to understand the dual-nature of "yield": it produces a value and pauses execution; but, at the same time, it also provides a placeholder for the input to the next iteration. In a sense, a single yield acts as both an output and an input.

If we look at this line of code:

```javascript
var a = yield( b );
```

... the first call to .next() will result in an IteratorResult whose value is "b" (yield as output). The generator will then pause on the yield, waiting for us to call .next() again. And, when we do call .next(), we can pass in a value that will replace the "yield" (yield as input), storing that value in the variable "a".
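Here's a tiny synchronous sketch of that round trip, driving a made-up `echo()` generator by hand. No promises are involved; it just shows a value going out through each `yield` and another value coming back in through `.next()`.

```javascript
// Illustrative generator: each yield sends a value out AND receives one back.
function* echo() {

    var a = yield( "b" );          // "b" goes out; whatever .next( x ) passes in becomes `a`.
    var c = yield( "got " + a );

    return( [ a, c ] );

}

var iterator = echo();

// Runs the body up to the first yield: { value: "b", done: false }
var first = iterator.next();

// "A" replaces the first yield, so a = "A": { value: "got A", done: false }
var second = iterator.next( "A" );

// "C" replaces the second yield, so c = "C": { value: [ "A", "C" ], done: true }
var third = iterator.next( "C" );

console.log( first, second, third );
```

Note that any argument passed to the very first `.next()` call is discarded, because there is no paused `yield` yet to receive it. That's why the proxy below kicks things off with a plain `iterator.next()`.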

In the following code, my simplified version of this coroutine logic is at the bottom:

```javascript
// I get the user with the given Id. Returns a promise.
function getUser( id ) {

    var promise = Promise.resolve({
        id: id,
        name: "Sarah"
    });

    return( promise );

}

// I get the friends for the user with the given Id. Returns a promise.
function getFriends( userID ) {

    if ( ! userID ) {

        throw( new Error( "InvalidArgument" ) );

    }

    var promise = Promise.resolve([
        {
            id: 201,
            name: "Joanna"
        },
        {
            id: 301,
            name: "Tricia"
        }
    ]);

    return( promise );

}

// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //

// I am a generator function. Such steps! Much iteration! So confusion!
function* getUserFriendsGenerator( id ) {

    var user = yield( getUser( id ) );
    var friends = yield( getFriends( id ) );

    return( [ user, friends ] );

}

// I get the user and friends for the user with the given ID. Returns a promise.
function getUserFriends( id ) {

    // Here, we are taking the generator function and wrapping it in an iteration
    // proxy. The iteration proxy is capable of synchronously returning a promise
    // while internally iterating over the resultant generator object asynchronously.
    var workflowProxy = createPromiseWorkflow( getUserFriendsGenerator );

    return( workflowProxy( id ) );

}

// Let's call our method that is running as a generator-proxy internally.
getUserFriends( 4 ).then(
    function handleResult( value ) {

        console.log( "getUserFriends() -- Result:" );
        console.log( JSON.stringify( value, null, 2 ) );

    },
    function handleReject( reason ) {

        console.log( "getUserFriends() -- Error:" );
        console.log( reason );

    }
);

// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //

// On its own, a generator function produces a generator object, which is just an
// iterator that can be stepped through, one yield at a time; it doesn't have any
// implicit promise functionality. However, if a generator happens to yield promises
// during iteration, we can wrap that generator in a proxy and let the proxy pipe the
// resolved values back into the next iteration of the generator. In this manner, the
// proxy can manage an internal promise chain that ultimately manifests as a single
// promise returned by the proxy.
function createPromiseWorkflow( generatorFunction ) {

    // Return the proxy that is now lexically-bound to the generator function.
    return( iterationProxy );

    // I proxy the generator and "reduce" its iteration values down to a single value,
    // represented by a promise. Returns a promise.
    function iterationProxy() {

        // When we call the generator function, the body of the generator is NOT
        // executed. Instead, an iterator is returned that can iterate over the
        // segments of the generator body, delineated by yield statements.
        var iterator = generatorFunction.apply( this, arguments );

        // function* () {
        //     var a = yield( getA() ); // (1)
        //     var b = yield( getB() ); // (2)
        //     return( [ a, b ] );      // (3)
        // }

        // When we initiate the iteration, we need to catch any errors that may occur
        // before the first "yield". Such an error will short-circuit the process and
        // result in a rejected promise.
        try {

            // When we call .next() here, we are kicking off the iteration of the
            // generator produced by our generator function. The function will start
            // executing and run until it hits the first "yield" statement (1), which
            // will return, as its result, the value supplied to the "yield" statement.
            // The .next() result will look like this:
            // --
            // {
            //     done: false,
            //     value: getA() // Passed to "yield"; may or may not be a Promise.
            // }
            // --
            // We then pipe this result back into the next iteration of the generator.
            return( pipeResultBackIntoGenerator( iterator.next() ) );

        } catch ( error ) {

            return( Promise.reject( error ) );

        }

        // I take the given iterator result, extract the value, and pipe it back into
        // the next iteration. Returns a promise.
        // --
        // NOTE: This function calls itself recursively, building up a promise-chain
        // that represents each generator iteration step.
        function pipeResultBackIntoGenerator( iteratorResult ) {

            if ( iteratorResult.done ) {

                // If the generator is done iterating through its function body, we can
                // return one final promise of the value that was returned from the
                // generator function (3). The iteratorResult would look like this:
                // --
                // {
                //     done: true,
                //     value: [ a, b ]
                // }
                // --
                // So, our return() statement here really is doing this:
                // --
                // return( Promise.resolve( [ a, b ] ) ); // (3)
                return( Promise.resolve( iteratorResult.value ) );

            }

            // If the generator is NOT DONE iterating through its function body, we need
            // to bridge the gap between the yields. We can do this by turning each step
            // into a promise that can build on itself recursively.
            var intermediaryPromise = Promise
                // Normalize the value returned by the iterator in order to ensure that
                // it's a promise (so that we know it is "thenable").
                .resolve( iteratorResult.value )
                .then(
                    function handleResolve( value ) {

                        // Once the promise has resolved with a value, we need to
                        // pipe that value back into the generator function, which is
                        // currently paused on a "yield" statement. When we call
                        // .next( value ) here, we are replacing the currently-paused
                        // "yield" with the given "value", and resuming the iteration.
                        // Essentially, this pre-yielded statement:
                        // --
                        // var a = yield( getA() ); // (1)
                        // --
                        // ... becomes this after we call .next( value ):
                        // --
                        // var a = value; // (1)
                        // --
                        // At this point, the generator function continues its execution
                        // until the next yield, or until it hits a return (implicit
                        // or explicit).
                        // --
                        // CAUTION: If iterator.next() throws an error that is not
                        // handled by the generator, it will cause an exception inside
                        // this resolution handler, which will cause the promise to be
                        // rejected.
                        return( pipeResultBackIntoGenerator( iterator.next( value ) ) );

                    },
                    function handleReject( reason ) {

                        // If the promise value from the previous step results in a
                        // rejection, we need to pipe that rejection back into the
                        // generator, where the generator may or may not be able to
                        // handle it gracefully. When we call iterator.throw(), we resume
                        // the generator function by throwing the error at the paused
                        // "yield". If the generator function doesn't catch this error,
                        // it will bubble up right here and cause an error inside of the
                        // handleReject() function (which will lead to a rejected
                        // promise). However, if the generator function catches the error
                        // and carries on, whatever it yields or returns next comes back
                        // from iterator.throw() as an iterator result, which we then
                        // pipe into the next step, just like a normal .next() result.
                        return( pipeResultBackIntoGenerator( iterator.throw( reason ) ) );

                    }
                )
            ;

            return( intermediaryPromise );

        }

    }

}
```

As you can see, this is complicated stuff, so I tried to leave a lot of comments in the actual code. Internally, the iteration proxy kicks off the iteration of the generator in a recursive function that builds a promise-chain. The promise-chain is allowed to build up asynchronously while the base promise is returned synchronously from the proxy invocation.

When we run the above code, we get the following terminal output:

```
getUserFriends() -- Result:
[
  {
    "id": 4,
    "name": "Sarah"
  },
  [
    {
      "id": 201,
      "name": "Joanna"
    },
    {
      "id": 301,
      "name": "Tricia"
    }
  ]
]
```

As you can see, the "user" and the "friends" were both gathered asynchronously, in serial.
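The demo never exercises the handleReject() branch of the proxy, since neither getUser() nor getFriends() rejects for an ID of 4. Here's a small synchronous sketch of what `iterator.throw()` does in that branch. The `resilientGenerator()` and `fragileGenerator()` functions are made-up examples. The call resumes the paused generator by throwing the error at the current `yield`. If the generator catches it, `.throw()` returns the next iterator result as usual; if not, the error escapes from `.throw()` itself, which is what turns into a rejected promise inside the proxy.

```javascript
// Hypothetical generator that recovers from an error thrown in at its first yield.
function* resilientGenerator() {

    var user;

    try {

        user = yield( "fetch user" );

    } catch ( error ) {

        // The error passed to iterator.throw() surfaces here, at the paused yield.
        user = { id: 0, name: "Guest (" + error.message + ")" };

    }

    var friends = yield( "fetch friends" );

    return( [ user, friends ] );

}

var iterator = resilientGenerator();

// { value: "fetch user", done: false }
var step1 = iterator.next();

// Caught inside the generator, which moves on: { value: "fetch friends", done: false }
var step2 = iterator.throw( new Error( "NotFound" ) );

// { value: [ { id: 0, name: "Guest (NotFound)" }, [] ], done: true }
var step3 = iterator.next( [] );

// A hypothetical generator with no try/catch lets the error escape from .throw().
function* fragileGenerator() {

    yield( "fetch user" );

}

var fragile = fragileGenerator();
fragile.next();

var escaped = null;

try {

    fragile.throw( new Error( "NotFound" ) );

} catch ( error ) {

    escaped = error.message;

}

console.log( step1, step2, step3, escaped );
```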

Once you see the complexity involved in managing the asynchronous iteration of the generator object, you can understand why it's encapsulated. But, when you're first trying to learn about generator functions and how generator iteration works, this encapsulation can make things much more confusing and overly magical. For me, pulling the curtain back, so to speak, makes it possible to follow the logic and build up a proper mental model. And, once I have the right mental model, I can comfortably welcome the encapsulation back into my asynchronous life.


Reader Comments

@All,

As a quick follow-up to this post, I wanted to take a look at performing parallel data-access inside a workflow-driven generator:

www.bennadel.com/blog/3124-gathering-data-in-parallel-inside-an-asynchronous-generator-based-workflow-in-javascript.htm

I think when we see this kind of yield-based workflow, it's going to be very easy to forget or *overlook* that some data can be accessed in parallel. This follow-up post just looks at how tasks that can be run in parallel can be done inside the generator-based workflow so as to leverage the beautiful asynchronous nature of JavaScript.
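One way to do that is to yield a single `Promise.all()`, so that both requests are started before the workflow pauses. This is a sketch of the general idea, not necessarily the code from the linked post. `runWorkflow()` is a hypothetical, stripped-down stand-in for `createPromiseWorkflow()`, and `getA()` / `getB()` are made-up async stubs that record when they start.

```javascript
// Hypothetical, stripped-down driver: resolves each yielded value and feeds it back in.
function runWorkflow( generatorFunction ) {

    var iterator = generatorFunction();

    function step( method, input ) {

        var result;

        try {

            result = iterator[ method ]( input );

        } catch ( error ) {

            return( Promise.reject( error ) );

        }

        if ( result.done ) {

            return( Promise.resolve( result.value ) );

        }

        return Promise.resolve( result.value ).then(
            function( value ) { return( step( "next", value ) ); },
            function( reason ) { return( step( "throw", reason ) ); }
        );

    }

    return( step( "next", undefined ) );

}

// Made-up async stubs that record when each request starts.
var started = [];

function getA() {

    started.push( "A" );
    return( new Promise( function( resolve ) { setTimeout( resolve, 20, 1 ); } ) );

}

function getB() {

    started.push( "B" );
    return( new Promise( function( resolve ) { setTimeout( resolve, 20, 2 ); } ) );

}

var promise = runWorkflow( function* () {

    // Both requests are started here, before the workflow pauses on the yield.
    var results = yield( Promise.all( [ getA(), getB() ] ) );

    return( results[ 0 ] + results[ 1 ] );

});

// Both requests are already in flight: started is now [ "A", "B" ].
console.log( started );
```

With two separate `yield` statements instead, `started` would only contain `"A"` at that point, since `getB()` wouldn't be called until the promise from `getA()` resolved.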

What is the point of using generators when the result is a promise? Why not just use promises here?

@Finch,

If you wanted to, you could totally use promises. And, the generator-based workflow we're using is actually just using promises under the hood (in conjunction with the new "yield" syntax). The difference is really just the look and feel of the code itself. The generator-based workflow gets your code looking a bit more like synchronous code in a blocking language.

Something that might look like this:

```javascript
var promise = Q.fcall(
    function() {

        var a = getAsyncA();
        var b = getAsyncB();

        return( Q.all([ a, b ]) );

    }
)
.spread(
    function( a, b ) {

        return( a + b );

    }
);
```

... could end up being reduced to something like this:

```javascript
var promise = workflow(
    function* () {

        var a = yield( getAsyncA() );
        var b = yield( getAsyncB() );

        return( a + b );

    }
);
```

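... functionally, this code is basically the same (one difference: in the generator version as written, getAsyncB() isn't called until the promise from getAsyncA() resolves, so the two requests run in serial rather than in parallel). But, syntactically, the generator-based workflow is a little more concise and a little more like a blocking, synchronous language.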

But, it's just personal preference - one version of the code isn't objectively better. The latter version clearly has more "magic" baked-in, which does make it harder to follow (while ironically perhaps easier to understand).

Very useful. Thank you.

I noticed that this does not handle nested yields. The workaround is not to yield a nested generator from within a generator function, but instead to use this generator proxy wrapper to call it as a function (which returns a promise that can be yielded).

I wonder if this wrapper could check for iterator return values and then return a recursive invocation of itself.
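Here's a rough sketch of the idea in that last comment. It assumes "nested yields" means yielding a generator object from inside a workflow. The hypothetical `runNested()` driver checks whether a yielded value looks like a generator object (it has `next()` and `throw()` methods). If so, the driver runs it through itself recursively, which turns it into a promise before the outer generator resumes. This is similar in spirit to how some coroutine libraries treat yielded generators, but the `isGeneratorObject()` check is my own assumption, not any library's API. `loadUser()` and `loadUserFriends()` are made-up generators. (ES6 also offers `yield*`, which delegates to an inner generator directly and should work with the original proxy unchanged.)

```javascript
// Hypothetical check: does this value look like a generator object?
function isGeneratorObject( value ) {

    return( !! value && typeof value.next === "function" && typeof value.throw === "function" );

}

// Hypothetical driver that also accepts yielded generator objects. Returns a promise.
function runNested( iterator ) {

    function step( method, input ) {

        var result;

        try {

            result = iterator[ method ]( input );

        } catch ( error ) {

            return( Promise.reject( error ) );

        }

        if ( result.done ) {

            return( Promise.resolve( result.value ) );

        }

        // Recursively run a nested generator to get a promise of its return value.
        var pending = isGeneratorObject( result.value )
            ? runNested( result.value )
            : Promise.resolve( result.value );

        return pending.then(
            function( value ) { return( step( "next", value ) ); },
            function( reason ) { return( step( "throw", reason ) ); }
        );

    }

    return( step( "next", undefined ) );

}

function* loadUser( id ) {

    var user = yield( Promise.resolve( { id: id, name: "Sarah" } ) );

    return( user );

}

function* loadUserFriends( id ) {

    // Yielding a generator object: the driver runs it and resumes us with its return value.
    var user = yield( loadUser( id ) );
    var friends = yield( Promise.resolve( [ "Joanna", "Tricia" ] ) );

    return( [ user, friends ] );

}

var resultPromise = runNested( loadUserFriends( 4 ) );

resultPromise.then( function( value ) {

    console.log( JSON.stringify( value ) );

});
```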