Experimenting With The Developer Ergonomics Of Data Access Using Parallel Struct Iteration In Lucee 5.3.2.77

Last week, I discovered that Lucee 5 has the ability to iterate over data structures using parallel threads. This feature opens up the possibility of gathering data using asynchronous, parallel queries, something that is common place in the JavaScript / Node.js world but has never really been easy in ColdFusion. As a quick follow-up post, I wanted to experiment with some different approaches to encapsulation in order to see how I might be able to create enjoyable developer ergonomics.

To quickly recap, Lucee can run all of its data structure iteration methods using parallel threads. This includes, Struct, Array, Query, and List iteration (and pertains to both looping and mapping). Here's an example of Array iteration being used to facilitate parallel data access:

var userIDs = [ 1, 2, 3, 4 ];

// Map the userIDs on to user records using parallel threads. This allows us to run the

// queries in parallel rather than in series.

var users = userIDs.map(

function( userID ) {

return( dataAccess.getUserByID( userID ) );

},

true // Use parallel threads for iteration.

);

Mapping a list of IDs onto a list of records is a nice example; but, it doesn't really represent the majority of data-access scenarios that I deal with. Mostly, what I would like is a way to run arbitrary queries in parallel while collecting the data that I need to render a particular View or process a given Command.

The approach that seemed most obvious to me was to use Struct mapping so that I could clearly name the individual values that I was trying to aggregate in parallel. Roughly speaking, I wanted code that looked something like this:

var results = runInParallel({

taskOne: taskOneFunction,

taskTwo: taskTwoFunction,

taskThree: taskThreeFunction,

taskFour: taskFourFunction

});

// Pluck items out of parallel results collection.

var taskOne = results.taskOne;

var taskTwo = results.taskTwo;

var taskThree = results.taskThree;

var taskFour = results.taskFour;

By using Struct mapping, I could clearly see the name of the data items that I was generating; and then, I could easily pluck those named items right out of the resultant collection.

Of course, using a naked Function reference to define the Task implementation is quite limiting since the Function doesn't receive any arguments. As such, I started to play around with different ways to define each Struct-Value such that it could leverage either closed-over values or receive some defined set of arguments.

Here's what I came up with. In the following ColdFusion component, the first three "getData" methods demonstrate the various ways in which I am invoking Struct mapping in order to facilitate parallel data access. Then, the methods below it provide the implementation details for said mapping.

component

output = false

hint = "I demonstrate various ways to use parallel Struct iteration in Lucee in order to facilitate parallel data access / query execution."

{

/**

* I explore some developer ergonomics of gathering data using parallel struct

* iteration in Lucee.

*/

public void function getData( required numeric userID ) {

var user = getUserByID( userID );

// Now that we know that we have a valid user, we can gather more detailed

// information in parallel. The following task all depend on the user result, but

// are independent of each other (in terms of inputs).

// --

// In this version, each task is defined a Function that contains the task logic.

var chunk = runInParallel({

subscription: function() {

return( getSubscription( user.id ) );

},

teamMembers: function() {

return( getTeamMembers( user.id ) );

},

projects: function() {

return( getProjects( user.id ) );

}

});

// Pluck the calculated values out of the parallel results chunk.

var subscription = chunk.subscription;

var teamMembers = chunk.teamMembers;

var projects = chunk.projects;

writeDump(

label = "APPROACH: Using inline closures.",

var = {

subscription: subscription,

teamMembers: teamMembers,

projects: projects

}

);

}

/**

* I explore some developer ergonomics of gathering data using parallel struct

* iteration in Lucee.

*/

public void function getData2( required numeric userID ) {

var user = getUserByID( userID );

// Now that we know that we have a valid user, we can gather more detailed

// information in parallel. The following task all depend on the user result, but

// are independent of each other (in terms of inputs).

// --

// In this version, each task is defined an array in which the first item is the

// task Function that contains the task logic; and, the second item is the

// argument collection that is used to invoke the task Function.

var chunk = runInParallel({

subscription: [ getSubscription, [ user.id ] ],

teamMembers: [ getTeamMembers, [ user.id ] ],

projects: [ getProjects, [ user.id ] ]

});

// Pluck the calculated values out of the parallel results chunk.

var subscription = chunk.subscription;

var teamMembers = chunk.teamMembers;

var projects = chunk.projects;

writeDump(

label = "APPROACH: Using arrays of Function / Arguments.",

var = {

subscription: subscription,

teamMembers: teamMembers,

projects: projects

}

);

}

/**

* I explore some developer ergonomics of gathering data using parallel struct

* iteration in Lucee.

*/

public void function getData3( required numeric userID ) {

var user = getUserByID( userID );

// Now that we know that we have a valid user, we can gather more detailed

// information in parallel. The following task all depend on the user result, but

// are independent of each other (in terms of inputs).

// --

// In this version, each task is defined as a Function that contains the task

// logic. And, each task is invoked with the given set of arguments. In this

// case, the function signatures must use NAMED PARAMETERS in order to pluck out

// the correct subset of invoke arguments, since each Function receives the same

// set of arguments.

var chunk = runInParallelWithArgs(

{

userID: user.id

},

{

subscription: getSubscription,

teamMembers: getTeamMembers,

projects: getProjects

}

);

// Pluck the calculated values out of the parallel results chunk.

var subscription = chunk.subscription;

var teamMembers = chunk.teamMembers;

var projects = chunk.projects;

writeDump(

label = "APPROACH: Using Function references and shared Arguments.",

var = {

subscription: subscription,

teamMembers: teamMembers,

projects: projects

}

);

}

// ---

// TASK INVOCATION METHODS.

// ---

/**

* I execute the given task collection in parallel threads and return the mapped

* results. Each struct value is assumed to be a Function that contains the task

* logic; or, an array in which the first item is the task Function and the second

* item contains the task arguments:

*

* key: function

* key: [ function, arguments ]

*

* @tasks I am the collection of tasks to run in parallel.

* @output false

*/

private struct function runInParallel( required struct tasks ) {

return( runInParallelWithArgs( {}, tasks ) );

}

/**

* I execute the given task collection in parallel threads and return the mapped

* results. Each struct value is assumed to be a Function that contains the task

* logic; or, an array in which the first item is the task Function and the second

* item contains the task arguments:

*

* key: function

* key: [ function, arguments ]

*

* If the value is a plain Function, it is invoked with the given set of task

* arguments.

*

* @taskArguments I am the argument collection to pass to each task operation.

* @tasks I am the collection of tasks to run in parallel.

* @output false

*/

private struct function runInParallelWithArgs(

required struct taskArguments,

required struct tasks

) {

var results = tasks.map(

function( taskName, taskOperation ) {

// If the operation is defined as an Array, then the first item is the

// task operation and the second item is the task arguments:

// --

// value: [ taskOperation, taskArguments ]

// --

// This approach overrides the taskArguments provided by the parent

// function, passing in the arguments provided by the array.

if ( isArray( taskOperation ) ) {

var invokeArguments = taskOperation[ 2 ];

var invokeOperation = taskOperation[ 1 ];

// If the operation is defined as a Function, then we're going to use the

// taskArguments provided by the parent function.

} else {

var invokeArguments = taskArguments;

var invokeOperation = taskOperation;

}

return( invokeOperation( argumentCollection = invokeArguments ) );

},

true // Perform struct mapping in parallel threads.

);

// TIP FROM BRAD WOOD

// ==================

// If you ever want to constraint the number of parallel threads to match the

// number of cores that your server has, you can introspect the runtime to get

// this value; then, you can pass this value in as a third argument to your

// parallel method (default parallel thread count is 20).

// ---

// createObject( "java", "java.lang.Runtime" ).getRuntime().availableProcessors()

return( results );

}

// ---

// DEMO DATA METHODS.

// ---

public array function getProjects( required numeric userID ) {

return([

{ id: 15, userID: userID, name: "My project!" },

{ id: 749, userID: userID, name: "My other project!" }

]);

}

public struct function getUserByID( required numeric userID ) {

return({ id: userID, name: "Kim Smith" });

}

public struct function getSubscription( required numeric userID ) {

return({ id: 1001, planType: "Pro", price: 9.99, userID: userID });

}

public array function getTeamMembers( required numeric userID ) {

return([

{ memberID: 99, leadID: userID },

{ memberID: 205, leadID: userID }

]);

}

}

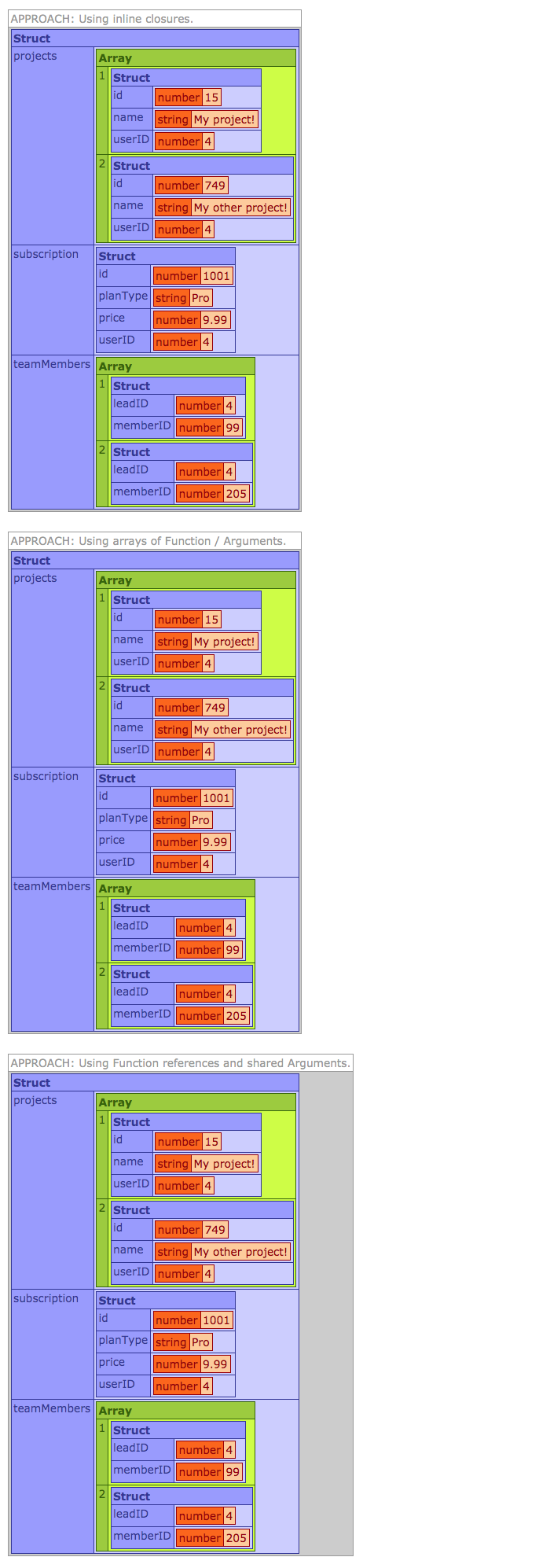

As you can see, my runInParallel() and runInParallelWithArgs() methods provide three different ways to define the ColdFusion Struct that we're using to implement parallel data access. And, if we run this, we can see that all three methods provide the same exact results:

I'm pretty excited about this stuff! I think that this approach provides an enjoyable developer experience; and, I hope that running tasks in parallel provides a performance boost for the end-user. That said, I am currently running a small experiment in a production application, and the results are not mind-blowing. Of course, I will report on that later when I have more data. For now, I'm just happy with the current developer ergonomics that I can create around parallel data access.

Want to use code from this post? Check out the license.

Reader Comments

Thanks as always for the efforts, Ben. Seeing THAT/HOW this is possible is indeed interesting. I think for many, though, the real challenge will be knowing WHEN/WHERE/WHY to use it--or even more simply traditional cfthread/thread, or the new runasyc in CF2018.

I just wanted to share a couple more thoughts for folks who may find or be directed to this series, in hopes of performance wins. As your next post in the series shows, that's not always going to come easily.

Still, as people grow comfortable with the ability to parallelize processes, any of these various ways, then they may be better able to identify potential use cases. So the first meta step is indeed learning what's possible.

Then once some "suspect" code is identified, they'll need to confirm that some set of synchronous operations really show slowness that could be possibly improved with threading/parallel access. Your next post shows you tracing performance with NR, and of course it's also possible with FR via frapi, and even in cfml (cftrace/trace, cflog/write log, cftimer/timer) all with their own pros and cons.

All this may be obvious to some, but I help people with troubleshooting daily, and features like these newer ones are enticing to many--like honey to a bear-- hoping it may solve a problem. I'm just stressing that one first has to confirm the problem first before trying such features.

Then as you show in that next post, confirm whether it really helps. As you show, sometimes such things not only don't help but they hurt. It's all about measurement, so again thanks for all you're sharing, especially that.

I'll say finally that in my experience such rather micro optimizations are nearly always secondary in win percentage to identification/resolution of other more fundamental problems, whether elsewhere in a given request, or perhaps unexpectedly in the application.cf* files, or about configuration issues in cf/lucee or the jvm, or about holdups in the services being called from cfml (db, cfhttp, etc.), or perhaps even the web server or web server connector, and so on, to name a few common culprits.

Again, perhaps obvious to some but since these are what are found to be the causes and solutions of nearly every problem presented to me, I just leave that for folks to consider strongly before considering coding changes. I'm sure Ben and the Invison folks do. I'm speaking more to most folks, from my experience.

Most importantly: measure, measure, measure/monitor, before and after a change. What may seem should be an obvious win could prove surprisingly elusive--and the real problem/solution could be missed by skipping/skimping on the measurement and iterative testing steps. Good on ya, Ben, for showing that next.

Hope that's helpful to some.

@Charlie,

1000% correct! And, for others, here is the post that Charlie is referring to:

www.bennadel.com/blog/3666-performance-case-study-parallel-data-access-using-parallel-struct-iteration-in-lucee-5-2-9-40.htm

... which shows that using these asynchronous control flows add complexity to the code and don't necessarily have a significant performance benefit.

Measuring everything has become the most powerful tool in my toolbox. That, in conjunction with incremental rollout with feature-flags really helps us identify bottlenecks and then see if change that we are making actually have any impactful outcome.

It's like our old VP of Data Services (Brad Brewer) used to say: "Chasing queries in the slow-query log is almost always going to be solving the wrong problem." .... his point being that the real problems aren't being addressed (such as the larger workflows and data-access patterns). And, that fixing slow queries can often make the long-term support of the Application harder because it is patching-over the "real problems".

All to say, your statement:

is spot on!

Thanks, Ben.